1.2 Installing the Orchestration Server to a SLES 11 Pacemaker Cluster Environment

This section includes information to help you install Orchestration Server components in a high availability SLES 11 SP2 environment. The sequence below is the supported method for configuring this environment.

You also need to install and configure the Orchestration Agent for the SLES 11 SP2 High Availability Extension (Pacemaker) cluster environment. You can find information to help you do this in the NetIQ Cloud Manager 2.1.5 SUSE Xen VM High Availability Configuration Guide.

NOTE: Upgrading from earlier versions of Cloud Manager Orchestration to a high availability environment is supported. For more information, see “Upgrading Cloud Manager Orchestration Components to Cloud Manager Orchestration Components” in the NetIQ Cloud Manager Orchestration Components Upgrade Guide.

1.2.1 Meeting the Prerequisites

The environment where the Orchestration Server is installed must meet the hardware and software requirements for high availability. This section includes the following information to help you understand those requirements.

Hardware Requirements for Creating a High Availability Environment

The following hardware components are required for creating a high availability environment for the Orchestration Server:

-

A minimum of two SLES 11 SP2 physical servers, each having dual network interface cards (NICs). These servers are the nodes of the cluster where the Orchestration Server is installed and are a key part of the high availability infrastructure.

-

A Fibre Channel or iSCSI storage area network (SAN), or network storage.

-

A STONITH device to provide node fencing. A STONITH device is a power switch that the cluster uses to reset nodes that are considered unresponsive. Resetting nodes that have stopped sending heartbeats is the only reliable way to ensure that nodes that hang, and only appear to be dead, do not corrupt data. For more information about setting up STONITH, see “Fencing and STONITH” in the SLES 11 High Availability Guide.
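The node and NIC requirements above lend themselves to a quick preflight check. The following Python 3 sketch is illustrative only and is not part of Cloud Manager. It assumes that a physical NIC is an entry under /sys/class/net that has a `device` link (loopback, bridge, and bond interfaces do not), and that you collect each node's result into a dictionary yourself. Node names such as `node1` are hypothetical, and the sketch does not check the SAN or the STONITH device.

```python
import os

SYS_CLASS_NET = "/sys/class/net"
MIN_NODES = 2          # from the hardware requirements in this section
MIN_NICS_PER_NODE = 2  # dual NICs per node


def physical_nics(sys_class_net=SYS_CLASS_NET):
    """List interfaces backed by a hardware device (assumption: they have a 'device' link)."""
    if not os.path.isdir(sys_class_net):
        return []
    return sorted(
        name
        for name in os.listdir(sys_class_net)
        if os.path.exists(os.path.join(sys_class_net, name, "device"))
    )


def hardware_problems(nics_per_node):
    """Check a mapping of hypothetical node names to NIC name lists; return problems found."""
    problems = []
    if len(nics_per_node) < MIN_NODES:
        problems.append(f"need at least {MIN_NODES} nodes, found {len(nics_per_node)}")
    for node, nics in sorted(nics_per_node.items()):
        if len(nics) < MIN_NICS_PER_NODE:
            problems.append(f"{node}: {len(nics)} NIC(s), need {MIN_NICS_PER_NODE}")
    return problems
```

Run `physical_nics()` on each candidate node and pass the collected results to `hardware_problems()`; an empty list means the node-count and NIC-count requirements are met.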

Software Requirements for Creating a High Availability Environment

The following software components are required for creating a high availability environment for the Cloud Manager Orchestration Server:

-

The high availability pattern on the SLES 11 SP2 High Availability Extension (HAE) RPM install source, available for download in 32-bit and 64-bit versions.

The SLES 11 SP2 HAE install source includes Oracle Cluster File System 2 (OCFS2), a parallel cluster file system that offers concurrent access to a shared file system. For more information, see Section 1.2.4, “Setting Up OCFS2 on SLES 11 SP2.”

SLES 11 SP2 HAE integrates these open source storage technologies (Pacemaker and OCFS2) in a high availability installation pattern which, when installed and configured, is known as the High Availability Storage Infrastructure. This combined technology automatically shares cluster configuration and coordinates cluster-wide activities to ensure predictable administration of storage resources for shared-disk-based clusters.

-

The Pacemaker software package, a high availability resource manager that supports multinode failover. Install all available online updates on every node that will be part of the Pacemaker cluster. You can download this cluster resource manager from the Pacemaker project download site.

-

DNS, available on the nodes of the cluster to resolve the cluster hostname to the cluster IP address.

-

The Orchestration Server is installed on all nodes of the cluster. A two-node or three-node configuration is recommended.

-

(Optional) The Cloud Manager Monitoring Server, installed on a non-clustered server.
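To confirm the DNS requirement, you can check on each node that the cluster hostname resolves to the cluster IP address and to nothing else. This Python 3 sketch uses the standard `socket.getaddrinfo` call. The hostname `zos-cluster.example.com` and the address `192.0.2.10` are placeholders, and treating any additional address as a failure is an assumption of this example.

```python
import socket

# Placeholder values: substitute your cluster hostname and cluster IP address.
CLUSTER_HOSTNAME = "zos-cluster.example.com"
CLUSTER_IP = "192.0.2.10"


def ipv4_addresses(hostname):
    """Return the sorted, de-duplicated IPv4 addresses that hostname resolves to."""
    infos = socket.getaddrinfo(hostname, None, socket.AF_INET)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def cluster_name_ok(hostname=CLUSTER_HOSTNAME, cluster_ip=CLUSTER_IP, lookup=ipv4_addresses):
    """Return True only if hostname resolves to exactly the cluster IP address."""
    try:
        addresses = lookup(hostname)
    except OSError:  # socket.gaierror (lookup failure) is a subclass of OSError
        return False
    return addresses == [cluster_ip]
```

The `lookup` parameter exists so the comparison logic can be exercised without a live DNS server; in normal use, call `cluster_name_ok()` with your real values on every node.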

1.2.2 Installing the SLES 11 SP2 High Availability Pattern

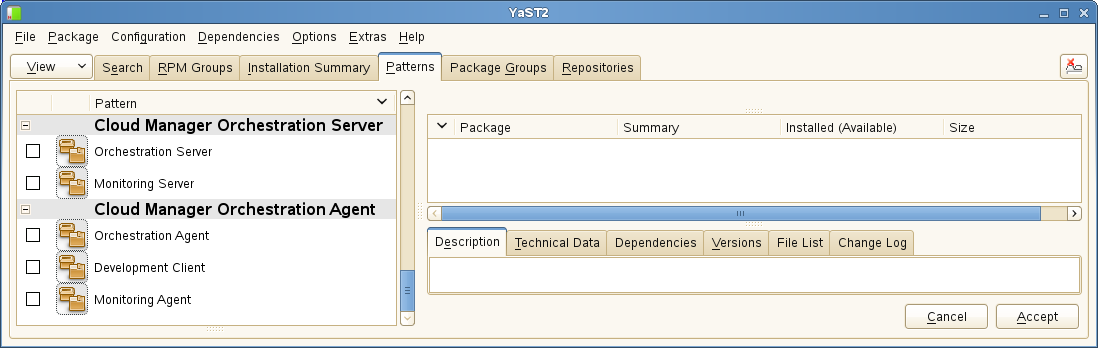

The high availability install pattern is included in the SLES HAE 11 SP2 ISO distribution. Use YaST2 (or the command line, if you prefer) to install the packages associated with the high availability pattern on each physical node that is to participate in the Orchestration Server cluster.

NOTE: The high availability pattern is included on the SLES HAE 11 SP2 install source, not the Cloud Manager install source.

The packages associated with high availability include:

-

drbd (Distributed Replicated Block Device)

-

EVMS high availability utilities

-

The Pacemaker subsystem for high availability on SLES

-

The Pacemaker CIM provider

-

A monitoring daemon for maintaining high availability resources that can be used by Pacemaker

-

A plug-in and interface loading library used by Pacemaker

-

An interface for the STONITH device

-

OCFS2 GUI tools

-

OCFS2 Core tools

The packages that must be installed, at a minimum, include:

-

ocfs2-tools-o2cb

-

yast2-cluster

-

libglue-devel

-

sle-hae-release

All other dependencies are installed automatically.

For more information, see “Installation and Basic Setup with YaST” in the SUSE Linux Enterprise High Availability Extension Administration Guide.
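After installation, you can verify the minimum package set on each node. The Python 3 sketch below runs `rpm -q NAME` for each package named in this section and treats a zero exit status as "installed." Python 3 is an assumption here (SLES 11 ships an older interpreter), so you might need to adapt the script or run the equivalent `rpm -q` commands by hand.

```python
import subprocess

# Minimum packages listed in this section (RPM names are case-sensitive).
REQUIRED_PACKAGES = ("ocfs2-tools-o2cb", "yast2-cluster", "libglue-devel", "sle-hae-release")


def rpm_installed(name):
    """Return True if `rpm -q NAME` exits with status 0 (package installed)."""
    try:
        result = subprocess.run(
            ["rpm", "-q", name],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except FileNotFoundError:  # rpm is not available on this host
        return False
    return result.returncode == 0


def missing_packages(required=REQUIRED_PACKAGES, is_installed=rpm_installed):
    """Return the required package names that are not installed, in listed order."""
    return [name for name in required if not is_installed(name)]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("missing: " + ", ".join(missing))
    else:
        print("all required packages are installed")
```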

1.2.3 Configuring SLES 11 Nodes with Time Synchronization and Installing Pacemaker to Each Node

When you have installed the high availability packages on each node of the cluster, you need to configure the Network Time Protocol (NTP) and the Pacemaker clustering environment on each physical machine that participates in the cluster.

Configuring Time Synchronization

To configure time synchronization, configure every node in the cluster to synchronize with a time server outside the cluster. The cluster nodes use this time server as their time synchronization source.

NTP is included as a network service in SLES HAE 11 SP2. Use the “Time Synchronization with NTP” instructions in the SUSE Linux Enterprise Server 11 High Availability Extension Administration Guide to help you configure each cluster node with NTP.
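To spot-check synchronization, you can compare a node's clock with the external time server. The following Python 3 sketch sends a single SNTP client request in the 48-byte NTP packet format and computes the standard offset estimate ((t1 - t0) + (t2 - t3)) / 2, where t0 and t3 are the local send and receive times and t1 and t2 are the server's receive and transmit timestamps. It is a diagnostic aid only, not a replacement for the NTP daemon. The server name `ntp.example.com` is a placeholder, and the sketch ignores the leap indicator, stratum, kiss-of-death replies, and the 2036 NTP era rollover.

```python
import socket
import struct
import time

NTP_PORT = 123
NTP_EPOCH_DELTA = 2208988800  # seconds from 1900-01-01 (NTP epoch) to 1970-01-01 (Unix epoch)


def ntp_to_unix(seconds, fraction):
    """Convert a 64-bit NTP timestamp (seconds, 32-bit fraction) to Unix time."""
    return seconds - NTP_EPOCH_DELTA + fraction / 2**32


def clock_offset(packet, t0, t3):
    """Estimate the local clock offset in seconds; positive means the local clock is behind.

    t0: local time the request was sent; t3: local time the reply arrived.
    """
    if len(packet) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    # Bytes 32-39: server receive timestamp (t1); bytes 40-47: server transmit timestamp (t2).
    rx_sec, rx_frac, tx_sec, tx_frac = struct.unpack("!4I", packet[32:48])
    t1 = ntp_to_unix(rx_sec, rx_frac)
    t2 = ntp_to_unix(tx_sec, tx_frac)
    return ((t1 - t0) + (t2 - t3)) / 2


def query_offset(server, timeout=5.0):
    """Send one SNTP client request to server and return the estimated offset."""
    request = b"\x1b" + bytes(47)  # LI=0, version=3, mode=3 (client); remaining fields zero
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        t0 = time.time()
        sock.sendto(request, (server, NTP_PORT))
        reply, _ = sock.recvfrom(1024)
        t3 = time.time()
    return clock_offset(reply, t0, t3)
```

For example, after NTP is configured, running `query_offset("ntp.example.com")` with your real time server on each node should return values close to zero on every node.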

Configuring Pacemaker

Pacemaker Cluster Resource Manager is an open source server clustering system that ensures high availability and manageability of critical network resources including data, applications, and services. It is a multinode clustering product for Linux that supports failover, failback, and migration (load balancing) of individually managed cluster resources.

Pacemaker packages are installed with the high availability pattern on the SLES HAE 11 SP2 install source. For detailed information about configuring Pacemaker, see “Configuring and Managing Cluster Resources (GUI)” in the SLES 11 High Availability Guide.

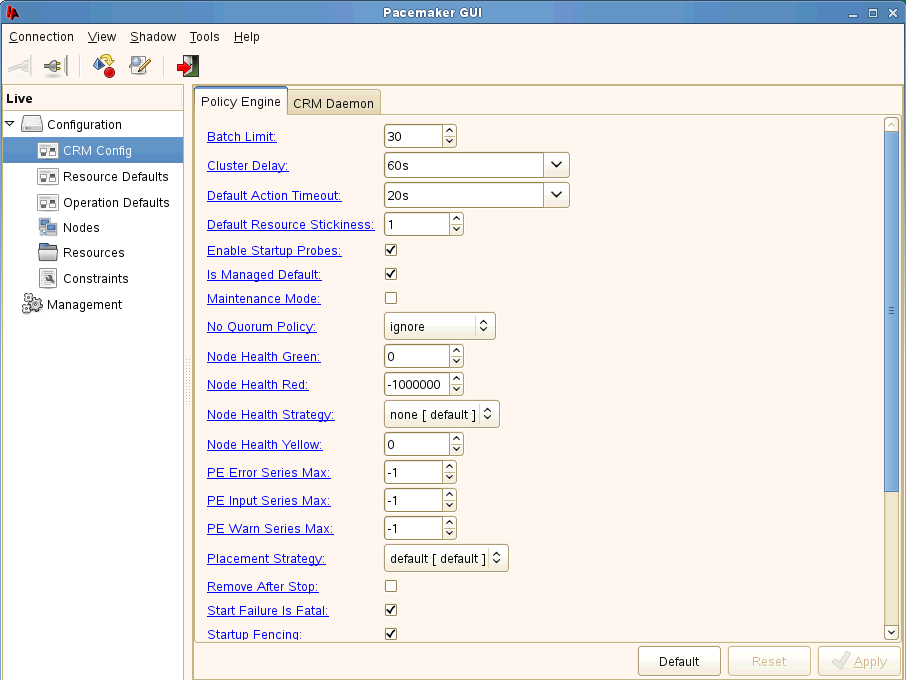

To enable Pacemaker for high availability of the Orchestration Server, you also need to specify an important timeout value. This value is configured in a field on the main settings page of the Pacemaker graphical interface (see Figure 1-2).

Figure 1-2 The Main Settings Page in the Pacemaker Graphical Interface

The value in this field controls how long Pacemaker waits for services to start. The default value is 20 seconds, but the Orchestration Server needs more time than this to start. We recommend setting this value to 120s. A larger value might be required if your Orchestration Server grid is very large.
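If you record or script this setting, it can help to normalize duration strings before comparing them with the recommended minimum. This Python 3 sketch is hypothetical: it accepts an assumed subset of Pacemaker-style suffixes (a bare number, or s, ms, m/min, h) and checks a value against the 120-second recommendation in this section. It does not read or change the cluster configuration.

```python
import re

RECOMMENDED_TIMEOUT_S = 120  # minimum recommended in this guide for the Orchestration Server

# Assumed subset of duration suffixes; a bare number is treated as seconds.
_UNIT_SECONDS = {"": 1, "s": 1, "sec": 1, "ms": 0.001, "msec": 0.001,
                 "m": 60, "min": 60, "h": 3600, "hr": 3600}
_DURATION = re.compile(r"(\d+)\s*([a-z]*)")


def timeout_seconds(spec):
    """Convert a duration such as '20s', '120', or '2min' to seconds."""
    match = _DURATION.fullmatch(spec.strip().lower())
    if match is None or match.group(2) not in _UNIT_SECONDS:
        raise ValueError(f"unrecognized duration: {spec!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def timeout_is_sufficient(spec, minimum=RECOMMENDED_TIMEOUT_S):
    """Return True if the duration meets the recommended minimum."""
    return timeout_seconds(spec) >= minimum
```

With this sketch, the 20-second default fails the check, while `120s` or `2min` passes.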

1.2.4 Setting Up OCFS2 on SLES 11 SP2

OCFS2 is a general-purpose journaling file system that is fully integrated into the Linux 2.6 (and later) kernel that ships with SLES 11 SP2. OCFS2 lets you store application binary files, data files, and databases on devices using network storage. All nodes in a cluster have concurrent read and write access to the file system, and a distributed lock manager helps prevent file access conflicts. OCFS2 supports up to 32,000 subdirectories and millions of files in each directory. The O2CB cluster service (a driver) runs on each node to manage the cluster.

To set up the high availability environment for the Orchestration Server, first install the High Availability pattern in YaST (this pattern includes the ocfs2-tools-o2cb and ocfs2console software packages) and configure the Pacemaker cluster management system on each physical machine that participates in the cluster. Then provide network storage with OCFS2 where the Orchestration files can be stored. For information about setting up and configuring OCFS2, see “Oracle Cluster File System 2” in the SLES 11 High Availability Guide.

Shared Storage Requirements for Creating a High Availability Environment

The High Availability Extension available in SLES 11 SP2 supports Fibre Channel or iSCSI storage area networks (SANs).

SAN configuration is beyond the scope of this document. For information about setting up a SAN, see the Oracle Cluster File System 2 documentation in the SLES 11 High Availability Guide.

IMPORTANT: The Cloud Manager Orchestration Server requires a specific mount point for file storage on the SAN. Use /zos for this mount point.
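Before you install, you can confirm on each node that /zos is mounted and backed by OCFS2. The Python 3 sketch below parses /proc/mounts, whose lines list the device, mount point, file system type, and options. It assumes the mount point contains no spaces (which /proc/mounts would escape as \040) and reports only the file system type, not the health of the SAN.

```python
MOUNT_POINT = "/zos"  # mount point required by the Orchestration Server


def mount_fstype(mounts_text, mount_point=MOUNT_POINT):
    """Return the file system type mounted at mount_point, or None if it is not mounted.

    If several mounts are stacked on the same point, the last (visible) one wins.
    """
    fstype = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == mount_point:
            fstype = fields[2]
    return fstype


def zos_on_ocfs2(proc_mounts="/proc/mounts"):
    """Return True if /zos is currently mounted with the ocfs2 file system type."""
    with open(proc_mounts) as handle:
        return mount_fstype(handle.read()) == "ocfs2"
```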

1.2.5 Installing the Orchestration Server on the First Clustered SLES 11 Node

NOTE: As you prepare to install the Cloud Manager Orchestration Server and use it in a high availability environment, make sure that the requirements to do so are met. For more information, see “Cloud Manager Orchestration Server Requirements” in the NetIQ Cloud Manager 2.1.5 Installation Planning Guide.

To install the Orchestration Server packages on the first node of the cluster:

-

Log in to the target SLES 11 server as root, then open YaST2.

-

Download the appropriate NetIQ Cloud Manager ISO to the SLES server, or load the NetIQ Cloud Manager DVD on the SLES server.

-

Define the NetIQ Cloud Manager ISO or DVD as an add-on product:

-

In the YaST Control Center, click > then click .

-

Click Add, select or , then follow the prompts to add the product.

-

Read and accept the license agreement, then click to display the Software Selection and System Tasks dialog box.

-

Select the Orchestration Server installation pattern for installation on the first node, then click .

When you select this pattern, the Monitoring Server installation pattern and the Monitoring Agent pattern are also selected. The Orchestration Server is the gateway between enterprise applications and resource servers: it manages computing nodes (resources) and the jobs that are submitted from applications to run on these resources.

HINT: If they are not already selected by default, you need to select the packages that are in the Orchestration Server pattern, the Monitoring Server pattern, and the Monitoring Client pattern.

-

Some additional packages that resolve the Orchestration Server dependencies are listed in an Automatic Changes dialog box.

Packages are written to your server.

-

When the package installation is complete, click .

-

Configure the Orchestration Server components that you have installed. You can use one of two methods to perform the configuration:

-

The Orchestration components (text-based) configuration script.

-

The Orchestration components GUI Configuration Wizard, which might be more user-friendly.

HINT: Although the text-based configuration process detects which RPM patterns are installed, the GUI Configuration Wizard requires that you specify which components are to be configured.

-

Finish the configuration by following the instructions in “Checking the Configuration.”