A.3 Installation and Configuration

This section provides the steps for installing and configuring Sentinel in an HA environment. Each step first describes the general approach and then refers to an exemplary setup that documents the details of one example cluster solution.

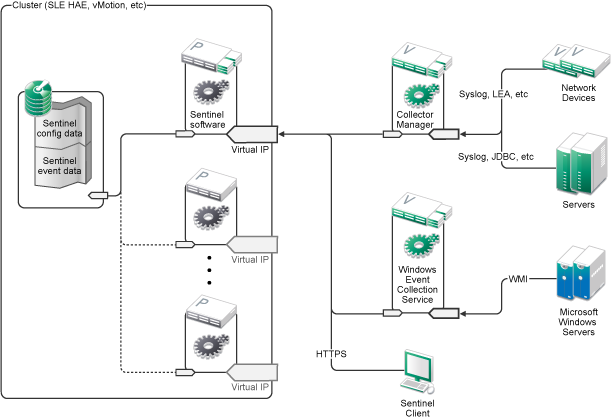

The following diagram represents an active-passive HA architecture:

A.3.1 Initial Setup

Configure the machine hardware, network hardware, storage hardware, operating systems, user accounts, and other basic system resources per the documented requirements for Sentinel and local customer requirements. Test the systems to ensure proper function and stability.

- As a best practice, all cluster nodes should be time-synchronized. Use NTP or a similar technology for this purpose.
- The cluster requires reliable hostname resolution. As a best practice, enter all internal cluster hostnames in the /etc/hosts file so that the cluster keeps working if DNS fails. If any cluster node cannot resolve all other nodes by name, the cluster configuration described in this section will fail.
- The CPU, RAM, and disk space characteristics of each cluster node must meet the system requirements defined in Section 5.0, Meeting System Requirements, based on the expected event rate.
- The disk space and I/O characteristics of the storage nodes must meet the system requirements defined in Section 5.0, Meeting System Requirements, based on the expected event rate and the data retention policies for primary and secondary storage.
- If you want to configure the operating system firewalls to restrict access to Sentinel and the cluster, see Section 8.0, Ports Used. It lists which ports must be available, depending on your local configuration and the sources that will send event data.
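You can confirm the hostname-resolution requirement on each node by querying every cluster name through the system resolver. The following is a minimal sketch and is not part of Sentinel or SLE HAE. The function name check_cluster_names is hypothetical, and you supply the node names yourself (for example node01, node02, and storage03 from the exemplary solution).

```shell
# check_cluster_names NAME...: hypothetical helper that checks whether each
# hostname given as an argument resolves on this node. getent follows
# /etc/nsswitch.conf, so it consults /etc/hosts as well as DNS.
# Prints each failure to stderr and returns non-zero if any lookup fails.
check_cluster_names() (
    status=0
    for name in "$@"; do
        if ! getent hosts "$name" >/dev/null 2>&1; then
            echo "cannot resolve: $name" >&2
            status=1
        fi
    done
    exit "$status"
)
```

Run it on every node before you configure the cluster, for example: check_cluster_names node01 node02 storage03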

The exemplary solution uses the following configuration:

- (Conditional) For traditional HA installations:
    - Two SUSE Linux 11 SP3 cluster node VMs.
    - The OS installation does not need to include X Windows, but it can if you want to configure the system through a graphical user interface. The boot scripts can be set to start without X (runlevel 3), so that X is started only when needed.
- (Conditional) For HA appliance installations: Two HA ISO appliance based cluster node VMs. For information about installing the HA ISO appliance, see Section 13.3.2, Installing Sentinel.
- The nodes have two NICs: one for external access and one for iSCSI communications.
- Configure the external NICs with IP addresses that allow remote access through SSH or similar. This example uses 172.16.0.1 (node01) and 172.16.0.2 (node02).
- Each node should have sufficient disk space for the operating system, Sentinel binaries and configuration data, cluster software, temp space, and so forth. See the SUSE Linux and SLE HAE system requirements, and the Sentinel application requirements.
- One SUSE Linux 11 SP3 VM configured with iSCSI Targets for shared storage:
    - The OS installation does not need to include X Windows, but it can if you want to configure the system through a graphical user interface. The boot scripts can be set to start without X (runlevel 3), so that X is started only when needed.
    - The system has two NICs: one for external access and one for iSCSI communications.
    - Configure the external NIC with an IP address that allows remote access using SSH or similar. This example uses 172.16.0.3 (storage03).
    - The system should have sufficient space for the operating system, temp space, a large volume for shared storage to hold Sentinel data, and a small amount of space for an SBD partition. See the SUSE Linux system requirements and the Sentinel event data storage requirements.

NOTE: In a production cluster, you can use internal, non-routable IPs on separate NICs (possibly a couple, for redundancy) for internal cluster communications.
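To apply the /etc/hosts best practice from Initial Setup with the exemplary addresses, you could generate the entries with a small script such as the sketch below. Both function names are hypothetical. The script assumes the simple "IP NAME" layout of /etc/hosts, so review the file afterward.

```shell
# print_cluster_hosts: prints /etc/hosts lines for the exemplary cluster.
# The names and external IPs are the ones used in this example; adjust them.
print_cluster_hosts() {
    printf '%s\t%s\n' \
        172.16.0.1 node01 \
        172.16.0.2 node02 \
        172.16.0.3 storage03
}

# append_missing_hosts FILE: reads "IP NAME" lines on stdin and appends each
# one to FILE only if NAME is not already listed there (comment lines are
# ignored), so running it twice does not create duplicate entries.
append_missing_hosts() (
    file=$1
    while read -r ip name; do
        [ -n "$name" ] || continue
        if ! awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
            printf '%s\t%s\n' "$ip" "$name" >> "$file"
        fi
    done
)
```

On each node, as root: print_cluster_hosts | append_missing_hosts /etc/hosts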

A.3.2 Shared Storage Setup

Set up your shared storage and make sure that you can mount it on each cluster node. If you are using Fibre Channel and a SAN, this might involve physical connections and other configuration. The shared storage holds Sentinel's databases and event data. It must therefore be sized for the customer environment, based on the expected event rate and data retention policies.

A typical implementation might use a fast SAN attached using FibreChannel to all the cluster nodes, with a large RAID array to store the local event data. A separate NAS or iSCSI node might be used for the slower secondary storage. As long as the cluster node can mount the primary storage as a normal block device, it can be used by the solution. The secondary storage can also be mounted as a block device, or could be an NFS or CIFS volume.

NOTE: Configure your shared storage and test mounting it on each cluster node. In normal operation, however, the cluster configuration handles the actual mounting of the storage.

The exemplary solution uses iSCSI Targets configured on a SUSE Linux VM, storage03, as listed in Initial Setup. iSCSI devices can be created from any file or block device. For simplicity, this example uses files created for this purpose.

Connect to storage03 and start a console session. Use the dd command to create a blank file of any desired size for Sentinel primary storage:

dd if=/dev/zero of=/localdata count=10240000 bs=1024

This command creates a file of roughly 10 GB (10,240,000 blocks of 1,024 bytes, that is, 10,000 MiB), filled with zeros copied from the /dev/zero pseudo-device. For details on the command-line options, such as how to create "disks" of different sizes, see the info or man page for dd. The iSCSI Target treats this file as if it were a disk. You can also use an actual disk if you prefer.
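The count value is the target size divided by the block size. The hypothetical helper below computes it from a size in mebibytes, assuming the same bs=1024 used above by default. It reproduces 10240000 for a 10,000 MiB file and 1024 for a 1 MiB file.

```shell
# dd_count SIZE_MIB [BLOCK_SIZE]: prints the count= value that makes dd write
# SIZE_MIB mebibytes using blocks of BLOCK_SIZE bytes (default 1024).
# Uses integer division, so pick a block size that divides the total evenly.
dd_count() {
    echo $(( $1 * 1024 * 1024 / ${2:-1024} ))
}
```

For example, to create a file of about 20 GB: dd if=/dev/zero of=/localdata bs=1024 count=$(dd_count 20000)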

Repeat this procedure to create a file for secondary storage:

dd if=/dev/zero of=/networkdata count=10240000 bs=1024

For this example we use two files ("disks") of the same size and performance characteristics. For a production deployment, you might put the primary storage on a fast SAN and the secondary storage on a slower iSCSI, NFS, or CIFS volume.

Configuring iSCSI Targets

Configure the files localdata and networkdata as iSCSI Targets:

1. Run YaST from the command line (or use the graphical user interface, if preferred): /sbin/yast
2. Select Network Devices > Network Settings.
3. Ensure that the Overview tab is selected.
4. Select the secondary NIC from the displayed list, then tab forward to Edit and press Enter.
5. On the Address tab, assign a static IP address of 10.0.0.3. This is the internal iSCSI communications IP.
6. Click Next, then click OK.
7. On the main screen, select Network Services > iSCSI Target.
8. If prompted, install the required software (iscsitarget RPM) from the SUSE Linux 11 SP3 media.
9. Click Service, then select When Booting to ensure that the service starts when the operating system boots.
10. Click Global, and then select No Authentication, because the current OCF Resource Agent for iSCSI does not support authentication.
11. Click Targets and then click Add to add a new target.
    The iSCSI Target auto-generates an ID and then presents an empty list of available LUNs (drives).
12. Click Add to add a new LUN.
13. Leave the LUN number as 0, then browse in the Path dialog (under Type=fileio) and select the /localdata file that you created. If you have a dedicated disk for storage, specify a block device, such as /dev/sdc.
14. Repeat steps 12 and 13 to add LUN 1, selecting /networkdata this time.
15. Leave the other options at their defaults. Click OK and then click Next.
16. Click Next again to select the default authentication options, then click Finish to exit the configuration. If you are asked to restart iSCSI, accept.
17. Exit YaST.
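For reference, the iscsitarget package used on SLES 11 is the iSCSI Enterprise Target (IET), which keeps its targets in /etc/ietd.conf. Assuming that file format, the target built above should correspond to a stanza like the one printed by the hypothetical helper below. The IQN is a placeholder, because YaST auto-generates the real one. Use the output to compare against /etc/ietd.conf on storage03 rather than to edit the file by hand while YaST manages it.

```shell
# ietd_target_stanza IQN PATH...: prints an IET-style target definition with
# one fileio LUN per PATH, numbered from 0 in argument order.
ietd_target_stanza() (
    iqn=$1
    shift
    echo "Target $iqn"
    lun=0
    for path in "$@"; do
        echo "    Lun $lun Path=$path,Type=fileio"
        lun=$((lun + 1))
    done
)
```

For example: ietd_target_stanza iqn.2014-01.com.example:storage03 /localdata /networkdata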

The above procedure exposes one iSCSI Target with two LUNs (/localdata and /networkdata) on the server at IP address 10.0.0.3. On each cluster node, make sure that you can mount the local data shared storage device.

Configuring iSCSI Initiators

Connect each cluster node to the iSCSI Targets. You must also format the devices (once) before you can use them.

1. Connect to one of the cluster nodes (node01) and start YaST.
2. Select Network Devices > Network Settings.
3. Ensure that the Overview tab is selected.
4. Select the secondary NIC from the displayed list, then tab forward to Edit and press Enter.
5. Click Address, then assign a static IP address of 10.0.0.1. This is the internal iSCSI communications IP.
6. Select Next, then click OK.
7. Click Network Services > iSCSI Initiator.
8. If prompted, install the required software (open-iscsi RPM) from the SUSE Linux 11 SP3 media.
9. Click Service, then select When Booting to ensure that the iSCSI service starts on boot.
10. Click Discovered Targets, and select Discovery.
11. Specify the iSCSI Target IP address (10.0.0.3), select No Authentication, and then click Next.
12. Select the discovered iSCSI Target with the IP address 10.0.0.3 and then select Log In.
13. Switch to automatic in the Startup drop-down and select No Authentication, then click Next.
14. Switch to the Connected Targets tab to ensure that you are connected to the target.
15. Exit the configuration. The iSCSI Targets should now be attached as block devices on the cluster node.
16. In the YaST main menu, select System > Partitioner.
17. In the System View, you should see new hard disks (such as /dev/sdb and /dev/sdc) in the list, with a type of IET-VIRTUAL-DISK. Tab over to the first one in the list (which should be the primary storage), select that disk, then press Enter.
18. Select Add to add a new partition to the empty disk. Format the disk as a primary ext3 partition, but do not mount it. Ensure that the option Do not mount partition is selected.
19. Select Next, then Finish after reviewing the changes that will be made. If you create a single large partition on this shared iSCSI LUN, you should end up with a /dev/sdb1 or similar formatted disk (referred to as /dev/<SHARED1> below).
20. Go back into the partitioner and repeat the partitioning/formatting process (steps 16-19) for /dev/sdc, or whichever block device corresponds to the secondary storage. This should result in a /dev/sdc1 or similar formatted partition (referred to as /dev/<NETWORK1> below).
21. Exit YaST.
22. (Conditional) If you are performing a traditional HA installation, create a mount point and test mounting the local partition as follows (the exact device name might depend on the specific implementation):

        # mkdir -p /var/opt/novell
        # mount /dev/<SHARED1> /var/opt/novell

    You should be able to create files on the new partition and see the files wherever the partition is mounted.
23. (Conditional) If you are performing a traditional HA installation, unmount the partition:

        # umount /var/opt/novell

On each remaining cluster node, repeat the procedure above so that every node can mount the local shared storage. Use steps 1-15 for HA appliance installations, or steps 1-15, 22, and 23 for traditional HA installations. In step 5, use a different IP for each cluster node (for example, 10.0.0.2 for node02).
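If you prefer the command line to YaST on the remaining nodes, you can sketch the Initiator part of the procedure (Discovery, Log In, and automatic startup) with open-iscsi's iscsiadm. The target IQN is auto-generated on storage03, so the value you pass is a placeholder. The helper names are hypothetical, and the script only prints the commands unless you set DRY_RUN=0.

```shell
# maybe_run: prints the command instead of executing it unless DRY_RUN=0.
maybe_run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "$*"; fi
}

# iscsi_connect PORTAL IQN: discover targets on PORTAL, log in to IQN, and
# set the node record to start automatically at boot.
iscsi_connect() {
    maybe_run iscsiadm -m discovery -t sendtargets -p "$1"
    maybe_run iscsiadm -m node -T "$2" -p "$1" --login
    maybe_run iscsiadm -m node -T "$2" -p "$1" --op update -n node.startup -v automatic
}
```

For example, review the output of iscsi_connect 10.0.0.3 <IQN from storage03>, then run it again as DRY_RUN=0 iscsi_connect ... to apply it. You still need the YaST network settings (step 5) for each node's iSCSI IP.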

A.3.3 Sentinel Installation

You can install Sentinel in two ways. You can install every part of Sentinel onto the shared storage, using the --location option to redirect the Sentinel install to wherever you have mounted the shared storage. Alternatively, you can install only the variable application data on the shared storage.

In this exemplary solution, we will follow the latter approach and install Sentinel to each cluster node that can host it. The first time Sentinel is installed we will do a complete installation including the application binaries, configuration, and all the data stores. Subsequent installations on the other cluster nodes will only install the application, and will assume that the actual Sentinel data will be available some time later (e.g. once the shared storage is mounted).

Exemplary Solution:

In this exemplary solution we install (for traditional HA installations) or configure (for HA appliance installations) Sentinel to each cluster node, storing only the variable application data on shared storage. This keeps the application binaries and configuration in standard locations, allows us to verify the RPMs, and also allows us to support warm patching in certain scenarios.

First Node Installation

Traditional HA Installation

1. Connect to one of the cluster nodes (node01) and open a console window.
2. Download the Sentinel installer (a tar.gz file) and store it in /tmp on the cluster node.
3. Execute the following commands:

        mount /dev/<SHARED1> /var/opt/novell
        cd /tmp
        tar -xvzf sentinel_server*.tar.gz
        cd sentinel_server*
        ./install-sentinel --record-unattended=/tmp/install.props

4. Run through the standard installation, configuring the product as appropriate. The installation program installs the binaries, databases, and configuration files. It also sets up the login credentials, configuration settings, and network ports.
5. Start Sentinel and test the basic functions. You can use the standard external cluster node IP to access the product.
6. Shut down Sentinel and unmount the shared storage using the following commands:

        rcsentinel stop
        umount /var/opt/novell

7. Remove the autostart scripts so that the cluster can manage the product:

        cd /
        insserv -r sentinel

Sentinel HA Appliance Installation

The Sentinel HA appliance includes the Sentinel software that is already installed and configured. To configure the Sentinel software for HA, perform the following steps:

1. Connect to one of the cluster nodes (node01) and open a console window.
2. Navigate to the following directory:

        cd /opt/novell/sentinel/setup

3. Record the configuration:
    a. Execute the following command:

            ./configure.sh --record-unattended=/tmp/install.props --no-start

       This step records the configuration in the file install.props, which is required to configure the cluster resources using the install-resources.sh script.
    b. Specify the option to select the Sentinel Configuration type.
    c. Specify 2 to enter a new password.
       If you specify 1, the install.props file does not store the password.
4. Shut down Sentinel using the following command:

        rcsentinel stop

5. Remove the autostart scripts so that the cluster can manage the product:

        insserv -r sentinel

6. Move the Sentinel data folder to the shared storage using the following commands. This allows the nodes to use the Sentinel data folder through shared storage.

        mkdir -p /tmp/new
        mount /dev/<SHARED1> /tmp/new
        mv /var/opt/novell/sentinel /tmp/new
        umount /tmp/new/

7. Verify that the Sentinel data folder was moved to the shared storage using the following commands:

        mount /dev/<SHARED1> /var/opt/novell/
        ls /var/opt/novell/sentinel
        umount /var/opt/novell/

Subsequent Node Installation

Repeat the installation on other nodes:

The initial Sentinel installer creates a user account for the product, using the next available user ID at the time of the install. Subsequent installs in unattended mode try to use the same user ID for account creation. Conflicts are still possible if the cluster nodes are not identical at the time of the install. We strongly recommend that you do one of the following:

- Synchronize the user account database across cluster nodes (manually, through LDAP, or similar), making sure that the sync happens before subsequent installs. In this case, the installer detects the existing user account and uses it.
- Watch the output of the subsequent unattended installs. The installer issues a warning if it could not create the user account with the same user ID.
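You can also compare the product account's UID across nodes directly. The sketch below assumes that the account is named novell, so verify the name the installer actually created on node01. It also assumes that root can SSH between nodes. uids_consistent is a hypothetical helper that only compares the values you collect.

```shell
# uids_consistent: reads "NODE UID" lines on stdin and succeeds only if every
# node reports the same UID; otherwise lists each UID and its nodes on stderr.
uids_consistent() {
    awk 'NF >= 2 { if (!($2 in nodes)) distinct++; nodes[$2] = nodes[$2] " " $1 }
         END {
             if (distinct > 1)
                 for (u in nodes) printf "uid %s:%s\n", u, nodes[u] > "/dev/stderr"
             exit (distinct == 1 ? 0 : 1)
         }'
}
```

For example: for n in node01 node02; do echo "$n $(ssh root@$n id -u novell)"; done | uids_consistent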

Traditional HA Installation

1. Connect to each additional cluster node (node02) and open a console window.
2. Execute the following commands:

        cd /tmp
        scp 'root@node01:/tmp/sentinel_server*.tar.gz' .
        scp root@node01:/tmp/install.props .
        tar -xvzf sentinel_server*.tar.gz
        cd sentinel_server*
        ./install-sentinel --no-start --cluster-node --unattended=/tmp/install.props
        cd /
        insserv -r sentinel

Sentinel HA Appliance Installation

1. Connect to each additional cluster node (node02) and open a console window.
2. Remove the autostart scripts so that the cluster can manage the product:

        insserv -r sentinel

3. Stop the Sentinel services:

        rcsentinel stop

4. Remove the Sentinel directory:

        rm -rf /var/opt/novell/sentinel

At the end of this process, Sentinel should be installed on all nodes, but it will likely not work correctly on any but the first node until various keys are synchronized, which will happen when we configure the cluster resources.

A.3.4 Cluster Installation

You need to install the cluster software only for traditional HA installations. The Sentinel HA appliance includes the cluster software and does not require manual installation.

Install the cluster software on each node, and register each cluster node with the cluster manager. Procedures to do so will vary depending on the cluster implementation, but at the end of the process each cluster node should show up in the cluster management console.

For our exemplary solution, we will set up SUSE Linux High Availability Extension and overlay that with Sentinel-specific Resource Agents:

If you do not use the OCF Resource Agent to monitor Sentinel, you will likely have to develop a similar monitoring solution for the local cluster environment. The OCF Resource Agent for Sentinel is a simple shell script that runs a variety of checks to verify whether Sentinel is functional. If you want to develop your own, examine the existing Resource Agent for examples (the Resource Agent is stored in the Sentinelha.rpm in the Sentinel download package).

There are many different ways in which a SLE HAE cluster can be configured, but we will select options that keep it fairly simple. The first step is to install the core SLE HAE software; the process is fully detailed in the SLE HAE Documentation. For information about installing SLES add-ons, see the Deployment Guide.

You must install the SLE HAE on all cluster nodes, node01 and node02 in our example. The add-on will install the core cluster management and communications software, as well as many Resource Agents that are used to monitor cluster resources.

Once the cluster software has been installed, an additional RPM should be installed to provide the additional Sentinel-specific cluster Resource Agents. The HA RPM can be found in the novell-Sentinelha-<Sentinel_version>*.rpm stored in the normal Sentinel download, which you unpacked to install the product.

On each cluster node, copy the novell-Sentinelha-<Sentinel_version>*.rpm into the /tmp directory, then:

cd /tmp

rpm -i novell-Sentinelha-<Sentinel_version>*.rpm

A.3.5 Cluster Configuration

You must configure the cluster software to register each cluster node as a member of the cluster. As part of this configuration, you can also set up fencing and STONITH resources to ensure cluster consistency.

The exemplary solution uses the simplest configuration, without additional redundancy or other advanced features. It uses unicast instead of the preferred multicast, because unicast requires less interaction with network administrators and is sufficient for testing purposes. It also sets up a simple SBD-based fencing resource.

Exemplary Solution:

The exemplary solution will use private IP addresses for internal cluster communications, and will use unicast to minimize the need to request a multicast address from a network administrator. The solution will also use an iSCSI Target configured on the same SUSE Linux VM that hosts the shared storage to serve as an SBD device for fencing purposes. As before, iSCSI devices can be created using any file or block device, but for simplicity here we will use a file that we create for this purpose.

The following configuration steps are very similar to those in Shared Storage Setup:

SBD Setup

Connect to storage03 and start a console session. Use the dd command to create a blank file of any desired size:

dd if=/dev/zero of=/sbd count=1024 bs=1024

In this case, we create a 1MB file filled with zeros (copied from the /dev/zero pseudo-device).

Configure that file as an iSCSI Target:

1. Run YaST from the command line (or use the graphical user interface, if preferred): /sbin/yast
2. Select Network Services > iSCSI Target.
3. Click Targets and select the existing target.
4. Select Edit. The UI presents a list of available LUNs (drives).
5. Select Add to add a new LUN.
6. Leave the LUN number as 2. Browse in the Path dialog and select the /sbd file that you created.
7. Leave the other options at their defaults, select OK and then Next, then click Next again to select the default authentication options.
8. Click Finish to exit the configuration. Restart the services if needed. Exit YaST.

This procedure should expose the SBD device as an additional LUN (LUN 2) on the iSCSI Target at IP address 10.0.0.3 (storage03).

NOTE: The following steps require that each cluster node can resolve the hostname of every other cluster node; otherwise the file sync service csync2 fails. If DNS is not set up or available, add an entry for each host to the /etc/hosts file, listing each IP and its hostname (as reported by the hostname command).

Node Configuration

Connect to a cluster node (node01) and open a console:

1. Run YaST.
2. Open Network Services > iSCSI Initiator.
3. Select Connected Targets, then the iSCSI Target you configured above.
4. Select the Log Out option and log out of the Target.
5. Switch to the Discovered Targets tab, select the Target, and log back in to refresh the list of devices (keep the automatic startup option and No Authentication).
6. Select OK to exit the iSCSI Initiator tool.
7. Open System > Partitioner and identify the SBD device as the 1MB IET-VIRTUAL-DISK. It will be listed as /dev/sdd or similar. Note which device it is.
8. Exit YaST.
9. Execute the command ls -l /dev/disk/by-id/ and note the device ID that is linked to the device name you located above.
10. Execute the command sleha-init.
11. When prompted for the network address to bind to, specify the external NIC IP (172.16.0.1).
12. Accept the default multicast address and port. You will override this setting later.
13. Enter 'y' to enable SBD, then specify /dev/disk/by-id/<device id>, where <device id> is the ID you located above (you can use Tab to auto-complete the path).
14. Complete the wizard and make sure no errors are reported.
15. Start YaST.
16. Select High Availability > Cluster (or just Cluster on some systems).
17. In the box at left, ensure Communication Channels is selected.
18. Tab over to the top line of the configuration, and change the udp selection to udpu (this disables multicast and selects unicast).
19. Select Add a Member Address and specify this node (172.16.0.1), then repeat to add the other cluster node(s): 172.16.0.2.
20. Select Finish to complete the configuration.
21. Exit YaST.
22. Run the command /etc/rc.d/openais restart to restart the cluster services with the new unicast transport.
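You can also script the search for the SBD device's by-id path (the ls -l /dev/disk/by-id/ step above). The sketch below resolves each symlink and prints the ones that point at the device you identified in the Partitioner. by_id_links is a hypothetical helper, and /dev/sdd is only the example name used above.

```shell
# by_id_links DEVICE [DIR]: prints every symlink in DIR (default
# /dev/disk/by-id) that resolves to the same file as DEVICE.
# Returns non-zero if no link matches.
by_id_links() (
    target=$(readlink -f "$1") || exit 1
    dir=${2:-/dev/disk/by-id}
    status=1
    for link in "$dir"/*; do
        [ -L "$link" ] || continue
        if [ "$(readlink -f "$link")" = "$target" ]; then
            echo "$link"
            status=0
        fi
    done
    exit "$status"
)
```

For example, by_id_links /dev/sdd prints the full /dev/disk/by-id/<device id> path to enter at the sleha-init SBD prompt. A disk can have more than one by-id link. All of them resolve to the same device.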

Connect to each additional cluster node (node02) and open a console:

1. Run YaST.
2. Open Network Services > iSCSI Initiator.
3. Select Connected Targets, then the iSCSI Target you configured above.
4. Select the Log Out option and log out of the Target.
5. Switch to the Discovered Targets tab, select the Target, and log back in to refresh the list of devices (leave the automatic startup option and No Authentication).
6. Select OK to exit the iSCSI Initiator tool.
7. Run the following command: sleha-join
8. Enter the IP address of the first cluster node.

In some circumstances the cluster communications do not initialize correctly. If the cluster does not start (the openais service fails to start):

1. Manually copy /etc/corosync/corosync.conf from node01 to node02, or run csync2 -x -v on node01, or manually set the cluster up on node02 through YaST.
2. Run /etc/rc.d/openais start on node02.

In some cases, the script might fail because the xinetd service does not properly add the new csync2 service. The other node needs this service to sync the cluster configuration files down to this node. If you see errors like csync2 run failed, you might have this problem. To fix it, execute kill -HUP `cat /var/run/xinetd.init.pid` and then re-run the sleha-join script.
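If you copy /etc/corosync/corosync.conf by hand, confirm that the unicast settings made in YaST arrived intact. The check below assumes the corosync 1.x syntax used by SLE HAE 11: transport: udpu in the totem section and one memberaddr entry per node. check_udpu_conf is a hypothetical helper.

```shell
# check_udpu_conf FILE ADDR...: succeeds if FILE sets "transport: udpu" and
# contains a "memberaddr: ADDR" entry for every ADDR given. Reports the first
# problem found on stderr. Fields are compared exactly, not as regexes.
check_udpu_conf() (
    file=$1
    shift
    if ! awk '$1 == "transport:" && $2 == "udpu" { f = 1 } END { exit !f }' "$file"; then
        echo "transport is not udpu in $file" >&2
        exit 1
    fi
    for addr in "$@"; do
        if ! awk -v a="$addr" '$1 == "memberaddr:" && $2 == a { f = 1 } END { exit !f }' "$file"; then
            echo "no memberaddr $addr in $file" >&2
            exit 1
        fi
    done
)
```

For example, on node02: check_udpu_conf /etc/corosync/corosync.conf 172.16.0.1 172.16.0.2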

At this point you should be able to run crm_mon on each cluster node and see that the cluster is running properly. Alternatively, you can use Hawk, the web console. The default login credentials are hacluster / linux.

This example requires two additional parameter changes. Whether they apply to a customer's production cluster depends on its configuration:

- Set the global cluster option no-quorum-policy to ignore. This example has only a two-node cluster, so any single node failure would break quorum and shut down the entire cluster:

        crm configure property no-quorum-policy=ignore

    NOTE: If your cluster has more than two nodes, do not set this option.

- Set the global cluster option default-resource-stickiness to 1. This encourages the resource manager to leave resources running in place rather than move them around:

        crm configure property default-resource-stickiness=1
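no-quorum-policy=ignore is appropriate only for two-node clusters. If you provision clusters of different sizes, a script can enforce the note above. The helper below is a hypothetical sketch that only prints the crm commands. Pass the number of nodes in your cluster, and pipe the output to sh to apply it.

```shell
# cluster_property_cmds NODE_COUNT: prints the crm commands for the two global
# options above; no-quorum-policy=ignore is emitted only for two-node clusters.
cluster_property_cmds() {
    if [ "$1" -eq 2 ]; then
        echo "crm configure property no-quorum-policy=ignore"
    fi
    echo "crm configure property default-resource-stickiness=1"
}
```

For this example: cluster_property_cmds 2 | sh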

A.3.6 Resource Configuration

As mentioned in Cluster Installation, this solution provides an OCF Resource Agent to monitor the core services under SLE HAE, and you can create alternatives if desired. The software also depends on several other resources, for which SLE HAE provides Resource Agents by default. If you do not want to use SLE HAE, you need to monitor these additional resources using some other technology:

- A filesystem resource corresponding to the shared storage that the software uses.
- An IP address resource corresponding to the virtual IP by which the services will be accessed.
- The Postgres database software that stores configuration and event metadata.

There are additional resources, such as MongoDB (used for Security Intelligence) and the ActiveMQ message bus. Currently, these are monitored as part of the core services.

Exemplary Solution

The exemplary solution uses simple versions of the required resources, such as the simple Filesystem Resource Agent. You can choose to use more sophisticated cluster resources like cLVM (a logical-volume version of the filesystem) if required.

The exemplary solution provides a crm script to aid in cluster configuration. The script pulls relevant configuration variables from the unattended setup file generated as part of the Sentinel installation. If you did not generate the setup file, or you wish to change the configuration of the resources, you can edit the script accordingly.

Connect to the original node on which you installed Sentinel (this must be the node on which you ran the full Sentinel install) and do the following (<SHARED1> is the shared volume you created above):

mount /dev/<SHARED1> /var/opt/novell

cd /usr/lib/ocf/resource.d/novell

./install-resources.sh

If the new resources do not come up correctly in the cluster, run /etc/rc.d/openais restart on node02.

The install-resources.sh script prompts you for two values: the virtual IP that users will use to access Sentinel, and the device name of the shared storage. It then creates the required cluster resources automatically. The script requires that the shared volume is already mounted. It also requires the unattended installation file created during the Sentinel install (/tmp/install.props). Run this script only on the first installed node; all relevant configuration files are synced to the other nodes automatically.
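You can check both prerequisites (the mounted shared volume and /tmp/install.props) before you run the script. This sketch uses hypothetical function names and reads /proc/mounts, so it assumes Linux.

```shell
# is_mounted DIR: succeeds if DIR appears as a mount point in /proc/mounts.
# Pass DIR without a trailing slash; paths containing spaces (escaped as \040
# in /proc/mounts) are not handled.
is_mounted() {
    awk -v d="$1" '$2 == d { found = 1 } END { exit !found }' /proc/mounts
}

# preflight PROPS_FILE MOUNT_DIR: checks that the unattended setup file exists
# and is not empty, and that the shared volume is mounted.
preflight() {
    if [ ! -s "$1" ]; then
        echo "missing or empty: $1" >&2
        return 1
    fi
    if ! is_mounted "$2"; then
        echo "not mounted: $2" >&2
        return 1
    fi
}
```

For example: preflight /tmp/install.props /var/opt/novell && ./install-resources.sh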

If the customer environment varies from this exemplary solution, you can edit the resources.cli file (in the same directory) and modify the primitives definitions from there. For example, the exemplary solution uses a simple Filesystem resource; you may wish to use a more cluster-aware cLVM resource.

After running the shell script, you can issue a crm status command and the output should look like this:

crm status

Last updated: Thu Jul 26 16:34:34 2012
Last change: Thu Jul 26 16:28:52 2012 by hacluster via crmd on node01
Stack: openais
Current DC: node01 - partition with quorum
Version: 1.1.6-b988976485d15cb702c9307df55512d323831a5e
2 Nodes configured, 2 expected votes
5 Resources configured.

Online: [ node01, node02 ]

stonith-sbd (stonith:external/sbd): Started node01
Resource Group: sentinelgrp
    sentinelip (ocf::heartbeat:IPaddr2): Started node01
    sentinelfs (ocf::heartbeat:Filesystem): Started node01
    sentineldb (ocf::novell:pgsql): Started node01
    sentinelserver (ocf::novell:sentinel): Started node01

At this point the relevant Sentinel resources should be configured in the cluster. You can examine how they are configured and grouped in the cluster management tool, for example by running crm status.
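To find the node that currently hosts the Sentinel group (for example, before you connect to it to check mounts), you can extract the node name from the crm status output. The sketch assumes the line layout shown above: resource name first, then "Started", with the node name last. active_node is a hypothetical helper.

```shell
# active_node RESOURCE: reads crm status output on stdin and prints the node
# on which RESOURCE is reported as Started (nothing if it is not started).
active_node() {
    awk -v r="$1" '$1 == r && $(NF - 1) == "Started" { print $NF; exit }'
}
```

For example: ssh root@$(crm status | active_node sentinelserver)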

A.3.7 Secondary Storage Configuration

As the final step in this process, configure secondary storage so that Sentinel can migrate event partitions to less-expensive storage. This step is optional. The secondary storage does not need to be highly available in the same way as the rest of the system. You can use any directory (mounted from a SAN or not), or an NFS or CIFS volume.

In the Sentinel web console, in the top menu bar, click Storage, then select Configuration, then select one of the radio buttons under Secondary storage not configured to set this up.

Exemplary Solution

The exemplary solution will use a simple iSCSI Target as a network shared storage location, in much the same configuration as the primary storage. In production implementations, these would likely be different storage technologies.

Use the following procedure to configure the secondary storage for use by Sentinel:

NOTE: Because this exemplary solution uses an iSCSI Target, the target is mounted as a directory for use as secondary storage. You therefore need to configure the mount as a filesystem resource, in the same way as the primary storage filesystem. The resource installation script does not set this up automatically, because other variations are possible. This example configures it manually.

1. Review the steps above to determine which partition was created for use as secondary storage (/dev/<NETWORK1>, or something like /dev/sdc1). If necessary, create an empty directory on which the partition can be mounted (such as /var/opt/netdata).
2. Set up the network filesystem as a cluster resource. Use the web console or run the command:

        crm configure primitive sentinelnetfs ocf:heartbeat:Filesystem params device="/dev/<NETWORK1>" directory="<PATH>" fstype="ext3" op monitor interval=60s

    where /dev/<NETWORK1> is the partition that was created in the Shared Storage Setup section above, and <PATH> is any local directory on which it can be mounted.
3. Add the new resource to the group of managed resources:

        crm resource stop sentinelgrp
        crm configure delete sentinelgrp
        crm configure group sentinelgrp sentinelip sentinelfs sentinelnetfs sentineldb sentinelserver
        crm resource start sentinelgrp

4. Connect to the node currently hosting the resources (use crm status or Hawk) and make sure that the secondary storage is properly mounted (use the mount command).
5. Log in to the Sentinel web interface.
6. Select Storage, then select Configuration, then select SAN (locally mounted) under Secondary storage not configured.
7. Type in the path where the secondary storage is mounted, for example /var/opt/netdata.
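The crm commands in this procedure differ only in the device and the mount directory. A small generator can therefore reduce the risk of leaving a placeholder unfilled. The hypothetical helper below prints the same commands shown above, with /dev/<NETWORK1> and <PATH> substituted. Review the output before you pipe it to sh.

```shell
# secondary_fs_cmds DEVICE DIR: prints the crm commands from the procedure
# above for the given secondary-storage partition and mount directory.
secondary_fs_cmds() {
    cat <<EOF
crm configure primitive sentinelnetfs ocf:heartbeat:Filesystem params device="$1" directory="$2" fstype="ext3" op monitor interval=60s
crm resource stop sentinelgrp
crm configure delete sentinelgrp
crm configure group sentinelgrp sentinelip sentinelfs sentinelnetfs sentineldb sentinelserver
crm resource start sentinelgrp
EOF
}
```

For example: secondary_fs_cmds /dev/sdc1 /var/opt/netdata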
