2.3 Policies

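Policies are XML-based files that aggregate the resource facts and constraints that are used to control resources. This section includes the following information about policies:

- Section 2.3.1, Policy Types
- Section 2.3.2, Job Arguments and Parameter Lists in Policies
- Section 2.3.3, The Role of Policy Constraints in Job Operation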

For information about facts, see Section 4.0, Understanding Grid Object Facts and Computed Facts.

2.3.1 Policy Types

Policies are used to enforce quotas, job queuing, resource restrictions, permissions, and so on. They can be associated with various Grid objects (jobs, users, resources, and so on). The following policy example shows a start constraint that limits the number of running jobs to a defined value (five in this example), while exempting certain users (here, the user named canary) from this limit. Jobs that are started after the limit is reached are queued until the number of running jobs decreases and the constraint passes:

<policy>
    <constraint type="start" reason="too busy">
        <or>
            <lt fact="job.instances.active" value="5" />
            <eq fact="user.name" value="canary" />
        </or>
    </constraint>
</policy>
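To make the evaluation concrete, the following Python sketch walks the start constraint above against a plain dictionary of fact values. It is an illustration only: the `evaluate` and `start_allowed` helpers and the sample fact dictionaries are hypothetical and are not part of PlateSpin Orchestrate, which evaluates constraints on the server.

```python
import xml.etree.ElementTree as ET

POLICY = """
<policy>
  <constraint type="start" reason="too busy">
    <or>
      <lt fact="job.instances.active" value="5" />
      <eq fact="user.name" value="canary" />
    </or>
  </constraint>
</policy>
"""

def evaluate(node, facts):
    """Evaluate one constraint clause against a dict of facts (hypothetical helper)."""
    if node.tag == "or":
        return any(evaluate(child, facts) for child in node)
    if node.tag == "and":
        return all(evaluate(child, facts) for child in node)
    actual = facts[node.get("fact")]
    expected = type(actual)(node.get("value"))  # compare using the fact's own type
    if node.tag == "lt":
        return actual < expected
    if node.tag == "eq":
        return actual == expected
    raise ValueError("unsupported clause: " + node.tag)

def start_allowed(facts):
    """Return True if the start constraint in POLICY passes for these facts."""
    constraint = ET.fromstring(POLICY).find("constraint")
    # Assumption: a constraint passes only if each of its top-level clauses passes.
    return all(evaluate(clause, facts) for clause in constraint)

print(start_allowed({"job.instances.active": 3, "user.name": "alice"}))   # under the limit
print(start_allowed({"job.instances.active": 5, "user.name": "alice"}))   # limit reached
print(start_allowed({"job.instances.active": 9, "user.name": "canary"}))  # exempt user
```

In this model, the second call is the case in which the job would remain queued: neither clause of the `or` passes until the active instance count drops below five.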

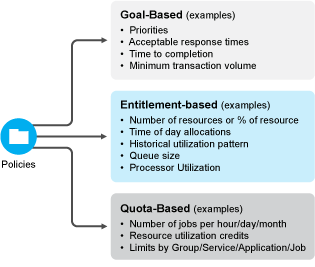

Policies can be based on goals, entitlements, quotas, and other factors, all of which are controlled by jobs.

Figure 2-1 Policy Types and Examples

2.3.2 Job Arguments and Parameter Lists in Policies

Part of a job’s static definition might include job arguments. A job argument defines what values can be passed in when a job is invoked. This allows the developer to statically define and control how a job behaves, while the administrator can modify policy values.

You define job arguments in an XML policy file, which is typically given the same base name as the job. The example job cracker.jdl has an associated policy file named cracker.policy. This file contains entries for the <jobargs> namespace, as shown in the following partial example from cracker.policy:

<jobargs>
    <fact name="cryptpw"
          type="String"
          description="Password of abc"
          value="4B3lzcNG/Yx7E"
          />
    <fact name="joblets"
          type="Integer"
          description="joblets to run"
          value="100"
          />
</jobargs>

The above policy defines two facts in the jobargs namespace for the cracker job. The first is a String fact named cryptpw, and the second is an Integer fact named joblets. Both facts have default values, so they do not need to be set when the job is invoked. If a default value were omitted, the job would require that the fact be set at invocation; a job does not start unless all required job argument facts are supplied. The default values of job argument facts can be overridden at job invocation.

Job arguments are passed to a job when the job is invoked. This is done in one of the following ways:

- From the zos run command, as shown in the following example:

  >zos run cracker cryptpw="dkslsl"

- From within a JDL job script when invoking a child job, as shown in the following job JDL fragment:

  self.runJob("cracker", { "cryptpw" : "asdfa" } )

- From the Job Scheduler, either with the PlateSpin Orchestrate Development Client or by a .sched file.
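The default and override rules described above can be sketched in Python as follows. This is a simplified model, not the Orchestrate implementation: the `resolve_jobargs` helper and the `CONVERTERS` table are hypothetical, and the third fact (`target`, which has no default value) is added only to show a required argument.

```python
import xml.etree.ElementTree as ET

JOBARGS = """
<jobargs>
  <fact name="cryptpw" type="String" description="Password of abc" value="4B3lzcNG/Yx7E" />
  <fact name="joblets" type="Integer" description="joblets to run" value="100" />
  <fact name="target" type="String" description="hypothetical fact with no default" />
</jobargs>
"""

# Only the two fact types used in the example are modeled here.
CONVERTERS = {"String": str, "Integer": int}

def resolve_jobargs(policy_xml, supplied):
    """Merge policy defaults with values supplied at invocation (hypothetical helper)."""
    resolved = {}
    for fact in ET.fromstring(policy_xml).findall("fact"):
        name = fact.get("name")
        convert = CONVERTERS[fact.get("type")]
        if name in supplied:
            # A value given at invocation overrides the policy default.
            resolved[name] = convert(supplied[name])
        elif fact.get("value") is not None:
            # Otherwise fall back to the default from the policy.
            resolved[name] = convert(fact.get("value"))
        else:
            # No default and no supplied value: the job must not start.
            raise ValueError("required job argument not supplied: " + name)
    return resolved

print(resolve_jobargs(JOBARGS, {"cryptpw": "dkslsl", "target": "host1"}))
```

Calling `resolve_jobargs(JOBARGS, {"cryptpw": "dkslsl"})` raises `ValueError` in this sketch, which mirrors the rule that a job does not start unless every required job argument is supplied.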

When you deploy a job, you can include an XML policy file that defines constraints and facts. Every job is a Grid object with its own set of predefined facts (job.id, and so on). In addition to these predefined facts, job arguments let you control a job at run time.

As a job writer, you define the set of job arguments in the jobargs fact space. Your goal in writing a job is to define the specific elements a job user is permitted to change. These job argument facts are defined in the job’s XML policy for every given job.

The job argument fact values can be passed to a job with any of the following methods used for running a job:

- As command-line arguments to the zos run command
- From the Job Arguments tab of the Job Scheduler in the Development Client
- From the Server Portal
- Through the runJob() method of the JDL Job class

Consequently, the Orchestrate Server run command passes in the job arguments. Similarly, in the Job Scheduler, you can define the job arguments to use when a job is scheduled or run. You can also specify job arguments when you use the Server Portal.

For example, in the following quickie.job example, the number of joblets allowed to run and the amount of sleep time between running joblets are set by the arguments numJoblets and sleeptime, as defined in the policy file for the job. If no job arguments are defined, the client cannot affect the job:

...
        # Launch the joblets
        numJoblets = self.getFact("jobargs.numJoblets")
        print 'Launching ', numJoblets, ' joblets'
        self.schedule(quickieJoblet, numJoblets)

class quickieJoblet(Joblet):

    def joblet_started_event(self):
        sleeptime = self.getFact("jobargs.sleeptime")
        time.sleep(sleeptime)

To view the complete example, see quickie.job.

As noted, when running a job, you can pass in a policy to control job behavior. Policy files define additional constraints to the job, such as how a resource might be selected or how the job runs. The policy file is an XML file defined with the .policy extension.

For example, as shown below, you can pass in a policy for the job named quickie, with an additional constraint that limits the chosen resources to those with a Linux OS. Suppose a policy file named linux.policy exists in the directory /mypolicies with this content:

<constraint type="resource">
    <eq fact="resource.os.family" value="linux" />
</constraint>

To start the quickie job using the additional policy, you would enter the following command:

>zos run quickie --policyfile=/mypolicies/linux.policy

2.3.3 The Role of Policy Constraints in Job Operation

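This section includes the following information:

- How Constraints Are Used
- Constraint Types
- Scheduling with Constraints
- Constraints Constructed in JDL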

How Constraints Are Used

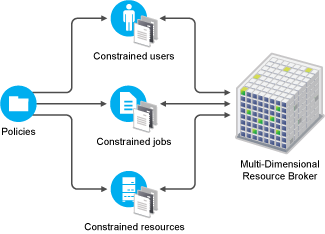

PlateSpin Orchestrate lets you create jobs that meet the infrastructure scheduling and resource management requirements of your data center, as illustrated in the following figure.

Figure 2-2 Multi-Dimensional Resource Scheduling Broker

There are many combinations of constraints and scheduling demands on the system that can be managed by the highly flexible PlateSpin Orchestrate Resource Broker. As shown in the figure below, many object types are managed by the Resource Broker. Resource objects are discovered (see Section 2.4, Resource Discovery). Other object types such as users and jobs can also be managed. All of these object types have “facts” that define their specific attributes and operational characteristics. PlateSpin Orchestrate compares these facts to requirements set by the administrator for a specific data center task. These comparisons are called “constraints.”

Figure 2-3 Policy-Based Resource Management Relying on Various Constraints

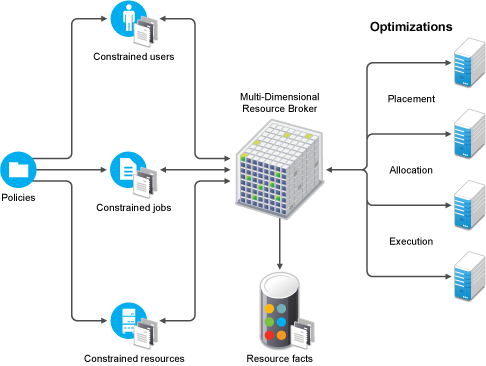

A policy is an XML file that specifies (among other things) constraints and fact values. Policies govern how jobs are dynamically scheduled based on various job constraints. These job constraints are represented in the following figure.

Figure 2-4 Policy-Based Job Management

The Resource Broker allocates or “dynamically schedules” resources based on the runtime requirements of a job (for example, the necessary CPU type, OS type, and so on) rather than allocating a specific machine in the data center to run a job. These requirements are defined inside a job policy and are called “resource constraints.” In simpler terms, in order to run a given job, the Resource Broker looks at the resource constraints defined in a job and then allocates an available resource that can satisfy those constraints.

Constraint Types

The constraint element of a policy can define the selection and allocation of Grid objects (such as resources) in a job. The required type attribute defines the selection type.

The following list explains how constraint types are applied in a job’s life cycle through policies:

- accept: A job-related constraint used to prevent work from starting; enforces a hard quota on the jobs. If the constraint is violated, the job fails.

- start: A job-related constraint used to queue up work requests; limits the quantity of jobs or the load on a resource. If the constraint is violated, the job stays queued.

- continue: A job-related constraint used to cancel jobs; provides special timeout or overrun conditions. If the constraint is violated, the job is canceled.

- provision: A joblet-related constraint (for resource selection) used to control automatic provisioning.

  Provision constraints are used by the Orchestrate Broker as it evaluates VMs or VM templates that could be automatically provisioned to satisfy a scheduled joblet. By default, a job’s job.provision.maxcount fact is set to 0, which means no automatic provisioning. If this value is greater than 0 and a joblet cannot be allocated to a physical resource, the provision constraints are evaluated to find a suitable VM or a VM template to provision that also satisfies the allocation and resource constraints.

- allocation: A joblet-related constraint (for resource selection) used to put jobs in a waiting state when the constraint is violated.

- resource: A joblet-related constraint (for resource selection) used to select specific resources. The joblet is put in a waiting state if the constraint is violated.

- vmhost: A VM-related constraint used to define a suitable VM host and repository for VM provisioning.

- repository: A VM-related constraint used to define a suitable repository for the storage of a VM.

  It is possible to create or edit a policy that constrains a repository during VM provisioning; however, a vmhost constraint type must be used, rather than a repository constraint type.

  For example:

  <constraint type="vmhost">
      <eq fact="repository.id" value="XXXX"/>
  </constraint>

- authorize: A VM-related constraint evaluated before a vmhost or repository constraint.
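The outcomes described in the list above can be summarized in a small Python lookup table. The sketch below is only a reading aid: the state names are illustrative, the `job_state` helper is hypothetical, and the order in which it checks the job-related constraints (accept, then start, then continue) is an assumption based on the order of the list.

```python
# Outcome of a violated constraint, taken from the descriptions above.
# The provision, vmhost, repository, and authorize types are omitted because
# the list does not state what happens when they are violated.
ON_VIOLATION = {
    "accept": "job fails",
    "start": "job stays queued",
    "continue": "job is canceled",
    "allocation": "waiting state",
    "resource": "waiting state",
}

def job_state(passed):
    """Map job-related constraint results to a state name (names are illustrative).

    `passed` maps a constraint type to True or False; a missing type counts
    as passed. The accept, start, continue order is an assumption.
    """
    checks = (("accept", "failed"), ("start", "queued"), ("continue", "canceled"))
    for constraint_type, state in checks:
        if not passed.get(constraint_type, True):
            return state
    return "running"

print(job_state({"accept": True, "start": False}))
print(job_state({"accept": True, "start": True, "continue": True}))
```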

While allocation constraints are continuously evaluated, the results of resource constraints are cached for a short period of time (30 seconds). This difference allows you as a job writer to separate constraints between those that require an immediate check of a constantly changing fact, and those that require fewer checks because the fact changes infrequently.

For example, a resource’s OS type might be unlikely to change, so a constraint that checks this fact fits in the resource constraint type (the assumption is that resource facts change infrequently, especially if they are used for determining joblet assignment). In contrast, a job instance fact can be changed frequently by a job instance, so a constraint that checks a job instance fact should fit in the allocation constraint type.
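The difference between the two evaluation modes can be modeled with a small time-based cache. The following Python sketch assumes nothing about how the Resource Broker is implemented; the `ConstraintChecker` class and its method names are hypothetical, and only the 30-second period comes from the text above.

```python
import time

RESOURCE_CACHE_SECONDS = 30  # cache period for resource constraint results

class ConstraintChecker:
    """Illustrative model: resource results are cached, allocation results are not."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._cache = {}  # resource id -> (expiry time, cached result)

    def check_resource(self, resource_id, predicate):
        now = self._clock()
        cached = self._cache.get(resource_id)
        if cached is not None and cached[0] > now:
            return cached[1]  # reuse the result until the cache period ends
        result = predicate()
        self._cache[resource_id] = (now + RESOURCE_CACHE_SECONDS, result)
        return result

    def check_allocation(self, predicate):
        return predicate()  # evaluated again on every call
```

With this model, a check on a slowly changing fact such as the OS type runs at most once per resource in any 30-second window, while a check on a frequently changing job instance fact always sees the current value.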

All of these constraints are visible and can be tested in the Policy Debugger in the PlateSpin Orchestrate Development Client.

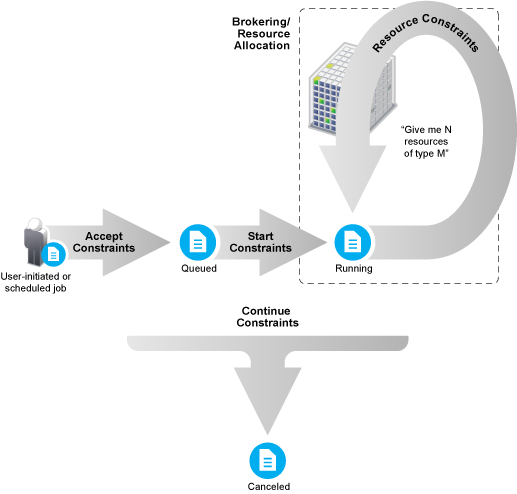

A job’s life cycle as determined by constraints is illustrated in the following figure.

Figure 2-5 Constraint-Based Job State Transition

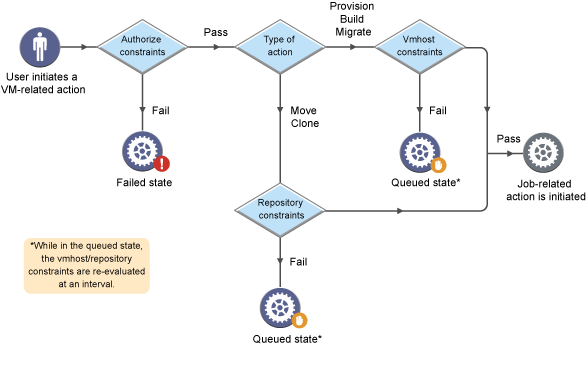

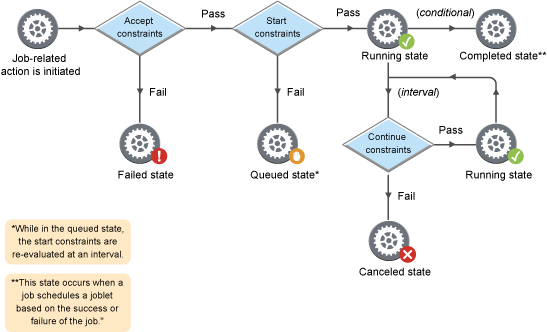

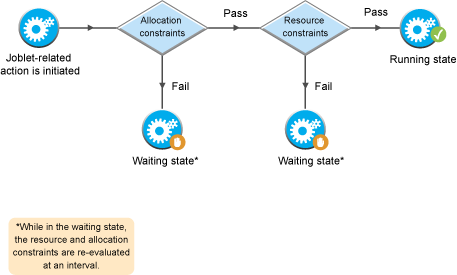

The following three figures provide more detail about the sequence of a job initiated by a user and the constraints it must satisfy before it runs. The diagrams are not intended to represent a finely-detailed flow, with every possible constraint, action, or state, but they do illustrate a high-level constraint workflow.

Figure 2-6 VM-related Constraint Workflow

Figure 2-7 Job-related Constraint Workflow

Figure 2-8 Joblet-related Constraint Workflow

Scheduling with Constraints

The constraint specification of a policy consists of a set of logical clauses and operators that compare property names and values. The grid server defines most of these properties, but the user or developer can also extend them arbitrarily.

All properties appear in the job context, which is an environment where constraints are evaluated. Compound clauses can be created by logical concatenation of earlier clauses. A rich set of constraints can thus be written in the policies to describe the needs of a particular job.

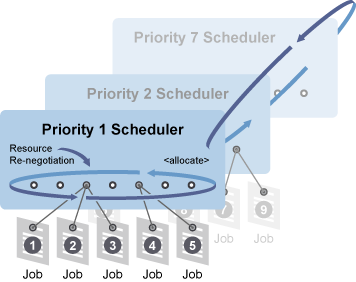

You can also set constraints through the use of deployed policies, and you can use jobs to specify additional constraints that can further restrict a particular job instance. The figure below shows the complete process employed by the Orchestrate Server to constrain and schedule jobs.

When a user issues a work request, the user facts (user.* facts) and job facts (job.* facts) are added to the job context. The server also makes all available resource facts (resource.* facts) visible by reference. This set of properties creates an environment where constraints can be executed.

The Job Scheduling Manager applies a logical AND to the job constraints (specified in the policies), the grid policy constraints (set on the server), any additional user-defined constraints specified on job submission, and any constraints specified by the resources.
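The combination of the four constraint sources can be pictured as a single filter in which a resource matches only if every constraint from every source passes. The Python sketch below is a simplified model: the `matching_resources` function is hypothetical, constraints are represented as plain predicates, and all sample fact names other than resource.os.family are made up for illustration.

```python
def matching_resources(resources, job, grid, user=(), resource=()):
    """Keep the resources that pass every constraint from all four sources."""
    constraints = [*job, *grid, *user, *resource]
    return [r for r in resources if all(check(r) for check in constraints)]

# Hypothetical sample data.
resources = [
    {"name": "node1", "resource.os.family": "linux", "memory_mb": 2048, "online": True},
    {"name": "node2", "resource.os.family": "windows", "memory_mb": 4096, "online": True},
    {"name": "node3", "resource.os.family": "linux", "memory_mb": 256, "online": True},
]

job_constraints = [lambda r: r["resource.os.family"] == "linux"]  # from the job policy
grid_constraints = [lambda r: r["online"]]                        # set on the server
user_constraints = [lambda r: r["memory_mb"] > 512]               # given at job submission

matches = matching_resources(resources, job_constraints, grid_constraints, user_constraints)
print([r["name"] for r in matches])
```

Because the sources are combined with AND, adding a constraint from any source can only shrink the list of matching resources.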

This procedure results in a list of matching resources. The PlateSpin Orchestrate solution returns three lists:

- Available resources
- Pre-emptable resources (nodes running lower-priority jobs that could be suspended)
- Resources that could be “stolen” (nodes running lower-priority jobs that could be killed)

These lists are then passed to the resource allocation logic where, given the possible resources, the ordered list of desired resources is returned along with minimum acceptable allocation information. The Job Scheduling Manager uses all of this data to appropriate resources for all jobs within the same priority group.

Figure 2-9 Job Scheduling Priority

As the Job Scheduling Manager continually re-evaluates the allocation of resources, it relies on the job policies as part of its real-time algorithm to help provide versatile and powerful job scheduling.

You set up a constraint for use by the Job Scheduling Manager by adding the constraint to the job policy. For example, you might write just a few lines of policy code to describe a job that requires an x86 machine with more than 512 MB of memory and a resource allocation strategy of minimizing execution time. The following example shows the resource constraint for the architecture and memory requirements:

<constraint type="resource">
    <and>
        <eq fact="cpu.architecture" value="x86" />
        <gt fact="memory.physical.total" value="512" />
    </and>
</constraint>

Constraints Constructed in JDL

Constraints can also be constructed in JDL and in the Java Client SDK. A JDL-constructed constraint can be used for grid search and for scheduling. A Java Client SDK-constructed constraint can only be used for Grid object search.

When you create constraints, it is sometimes useful to access facts on a Grid object that is not in the context of the constraint evaluation. An example scenario would be to sequence the running of jobs triggered by the Job Scheduler.

In this example, you need to make job2 run only when all instances of job1 are complete. To do this, you could add the following start constraint to the job2 definition:

<constraint type="start">
    <eq fact="job[job1].instances.active" value="0"/>
</constraint>

Here, the job in the context is job2; however, the facts on job1 (instances.active) can still be accessed. The general form of the fact name is:

<grid_object_type>[<grid_object_name>].rest.of.fact.space
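As an illustration of this naming form, the following Python sketch splits an explicit fact name into its three parts. The `parse_fact_name` helper and the regular expression are hypothetical and only model the syntax shown above; they do not reflect how PlateSpin Orchestrate resolves fact names internally.

```python
import re

# Models the form <grid_object_type>[<grid_object_name>].rest.of.fact.space
EXPLICIT_FACT = re.compile(r"^(?P<type>[A-Za-z]+)\[(?P<name>[^\]]+)\]\.(?P<rest>.+)$")

def parse_fact_name(fact_name):
    """Split an explicit Grid object fact name into (type, object name, fact path).

    Returns None when the name has no bracketed object name, which means the
    fact refers to the object already in the constraint context.
    """
    match = EXPLICIT_FACT.match(fact_name)
    if match is None:
        return None
    return match.group("type"), match.group("name"), match.group("rest")

print(parse_fact_name("job[job1].instances.active"))
print(parse_fact_name("job.instances.active"))
```

The first call yields the object type (job), the object name (job1), and the remaining fact path (instances.active); the second returns None because the name carries no explicit object reference.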

PlateSpin Orchestrate supports specific Grid object access for the following Grid objects:

- Jobs
- Resources (physical or virtual machines)
- VM hosts (physical machines hosting guest VMs)
- Virtual Disks
- Virtual NICs
- Repositories
- Virtual Bridges
- Users

Currently, explicit group access is not supported.

For more detailed information, see the following JDL constraint definitions: