5.13 Advanced Workload Protection Topics

5.13.1 Protecting Windows Clusters

PlateSpin Protect supports the protection of a Microsoft Windows cluster’s business services. The supported clustering technologies are:

-

Windows 2008 R2 Server-based Microsoft Failover Cluster (Node and Disk Majority Quorum and No Majority: Disk Only Quorum models)

-

Windows 2003 R2 Server-based Windows Cluster Server (Single-Quorum Device Cluster model)

NOTE: The Windows cluster management software provides failover and failback control for the resources running on its cluster nodes. This document refers to this action as a cluster node failover or failback.

The PlateSpin Server provides failover and failback control for the protected workload that represents the cluster. This document refers to this action as a PlateSpin failover or failback.

This section includes the following information:

-

Workload Protection

-

PlateSpin Failover

-

PlateSpin Failback

NOTE: For information about rebuilding the Windows 2008 R2 Failover Cluster environment after a PlateSpin failover and failback occur, see Knowledgebase Article 7015576.

Workload Protection

A cluster is protected through incremental replications of changes on the active node, which are streamed to a virtual one-node cluster. You can use the virtual one-node cluster while you troubleshoot the source infrastructure.

The scope of support for cluster protection is subject to the following conditions:

-

Specify the cluster’s virtual IP address when you perform an Add Workload operation, and not the IP address of a node in the cluster. A cluster’s virtual IP address represents whichever node currently owns the quorum resource of the cluster. If you specify the IP address of an individual node, the node is inventoried as a regular, cluster-unaware Windows workload.

-

A cluster’s quorum resource must be collocated with the cluster’s resource group (service) being protected.

When you use block-based transfer, the block-based driver components are not installed on the cluster nodes. Instead, block-based transfer uses a driverless sync with MD5-based replication. Because the block-based driver is not installed, no reboot is required on the source cluster nodes.

NOTE: File-based transfer is not supported for protecting Microsoft Windows clusters.
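The driverless, MD5-based sync described above can be understood as a per-block checksum comparison: hash each block on the source and on the target, then transfer only the blocks whose digests differ. The following Python sketch shows that general technique only. It is not PlateSpin's implementation, and the 64 KiB block size and the names `block_digests` and `changed_blocks` are assumptions.

```python
from __future__ import annotations

import hashlib

# Assumed block size for illustration; PlateSpin's actual value is not documented here.
BLOCK_SIZE = 64 * 1024


def block_digests(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Return the MD5 digest of each fixed-size block of data."""
    return [
        hashlib.md5(data[i:i + block_size]).digest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(source: bytes, target_digests: list[bytes],
                   block_size: int = BLOCK_SIZE) -> list[int]:
    """Return indices of source blocks that are missing or different on the target.

    Only these blocks would need to be sent. (A real sync would also have to
    truncate a target that is longer than the source; that is not modeled here.)
    """
    source_digests = block_digests(source, block_size)
    return [
        i for i, digest in enumerate(source_digests)
        if i >= len(target_digests) or digest != target_digests[i]
    ]


# Usage: change one byte inside the second 64 KiB block.
old = b"A" * 100_000
new = old[:70_000] + b"B" + old[70_001:]
print(changed_blocks(new, block_digests(old)))  # [1]
```

Comparing digests instead of raw blocks means each side only needs to exchange a small hash per block. This is why a sync of this kind can run without a kernel driver that tracks writes.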

If a cluster node failover occurs between incremental replications of a protected cluster, and the new active node's profile is similar to that of the failed active node, the protection contract continues as scheduled for the next incremental replication. Otherwise, the next incremental replication command fails. The profiles of cluster nodes are considered similar if:

-

They have the same number of volumes.

-

Each volume is exactly the same size on each node.

-

They have an identical number of network connections.

-

The serial numbers of the local volumes (System volume and System Reserved volume) are the same on each cluster node.
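The four similarity conditions can be expressed as a single check. The Python sketch below only illustrates the rules listed above. The `NodeProfile` structure, its field names, and the `profiles_similar` function are hypothetical. PlateSpin Protect evaluates these conditions internally and does not expose them through any such API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeProfile:
    # Hypothetical model of the node properties the similarity rules depend on.
    name: str
    volumes: dict[str, int]        # volume label -> size (any consistent unit, e.g. GB)
    network_connections: int       # number of network connections on the node
    local_serials: dict[str, str]  # local volume -> serial, e.g. {"System": "1A2B-3C4D"}


def profiles_similar(a: NodeProfile, b: NodeProfile) -> bool:
    """Return True if incrementals can continue after a failover from a to b."""
    if len(a.volumes) != len(b.volumes):
        return False  # different number of volumes
    if a.volumes != b.volumes:
        return False  # some volume differs in size (or label)
    if a.network_connections != b.network_connections:
        return False  # different number of network connections
    # System and System Reserved volume serial numbers must match.
    return a.local_serials == b.local_serials


# Usage: identical storage and networking, but different System volume serials.
node1 = NodeProfile("Node1", {"C:": 60, "D:": 100}, 2,
                    {"System": "1A2B-3C4D", "System Reserved": "5E6F-7081"})
node2 = NodeProfile("Node2", {"C:": 60, "D:": 100}, 2,
                    {"System": "9A8B-7C6D", "System Reserved": "5E6F-7081"})
print(profiles_similar(node1, node2))  # False: the System volume serials differ
```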

If the local drives on each node of the cluster have different serial numbers, you cannot run an incremental replication after a cluster node failover occurs. For example, during a cluster node failover, the active node, Node 1, fails, and the cluster software makes Node 2 the active node. If the local drives on the two nodes have different serial numbers, the next incremental replication command for the workload fails.

There are two supported options for Windows clusters in this scenario:

-

(Recommended) Use the customized Volume Manager utility to change the local volume serial numbers to match each node of the cluster. For more information, see Synchronizing Serial Numbers on Cluster Node Local Storage.

-

(Conditional and Optional) If you see this error:

Volume mappings does not contain source serial number: xxxx-xxxx,

it might have been caused by a change in the active node prior to running the incremental replication. In this case, you can run a full replication to ensure the cluster is once again protected. Incremental replications should function again after the full replication.

NOTE: If you choose not to match the volume serial numbers on each node in the cluster, a full replication is required after a cluster node failover occurs.
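A monitoring script could use the guidance above to decide which kind of replication to run next. In this Python sketch, the function names and parameters are hypothetical, and the error text is matched exactly as quoted in this section. The pattern for the serial number also rests on an assumption: it expects two groups of four hexadecimal digits, which is how Windows displays volume serial numbers. The sketch does not start the replication itself; use whatever tooling you normally use for that.

```python
import re
from typing import Optional

# Error text as quoted in this section; the serial-number pattern is an assumption.
SERIAL_MISMATCH = re.compile(
    r"Volume mappings does not contain source serial number:\s*"
    r"([0-9A-Fa-f]{4}-[0-9A-Fa-f]{4})"
)


def mismatched_serial(error_text: str) -> Optional[str]:
    """Return the serial number named in a volume-mapping error, or None."""
    match = SERIAL_MISMATCH.search(error_text)
    return match.group(1) if match else None


def next_replication_kind(last_error: Optional[str],
                          node_failover_occurred: bool,
                          serials_synchronized: bool) -> str:
    """Return "full" or "incremental" for the next replication of a protected cluster."""
    if last_error and mismatched_serial(last_error):
        return "full"  # an active-node change broke the volume mappings
    if node_failover_occurred and not serials_synchronized:
        return "full"  # unsynchronized serials: incrementals fail after a node failover
    return "incremental"


# Usage
print(next_replication_kind(
    "Volume mappings does not contain source serial number: 1A2B-3C4D",
    node_failover_occurred=False, serials_synchronized=True))  # full
```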

-

If a cluster node failover occurs prior to the completion of the copy process during a full replication or an incremental replication, the command aborts and a message displays indicating that the replication needs to be re-run.

To protect a Windows cluster, follow the normal workload protection workflow (see Basic Workflow for Workload Protection and Recovery).

PlateSpin Failover

When the PlateSpin failover operation is complete and the virtual one-node cluster comes online, you see a multi-node cluster with one active node (all other nodes are unavailable).

To perform a PlateSpin failover or a test failover of a Windows cluster, the cluster must be able to connect to a domain controller. To use the test failover functionality, you must protect the domain controller along with the cluster. During the test, bring up the domain controller first and then the Windows cluster workload, on an isolated network.

PlateSpin Failback

A PlateSpin failback operation requires a full replication for Windows Cluster workloads.

If you configure the PlateSpin failback as a full replication to a physical target, you can use one of these methods:

-

Map all disks on the PlateSpin virtual one-node cluster to a single local disk on the failback target.

-

Add another disk (Disk 2) to the physical failback machine. You can then configure the PlateSpin failback operation to restore the failover's system volume to Disk 1 and the failover's additional disks (the previously shared disks) to Disk 2. This allows the system disk to be restored to a storage disk of the same size as on the original source.
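The two physical-target options differ only in where each volume of the virtual one-node cluster ends up. The following Python sketch models that choice as a volume-to-disk plan. The function name, the volume labels, and the "Disk 1"/"Disk 2" strings are illustrative assumptions. The real mapping is configured as part of the PlateSpin failback operation and is not produced by code like this.

```python
from __future__ import annotations


def plan_failback_disks(volumes: list[str], system_volume: str,
                        use_second_disk: bool) -> dict[str, str]:
    """Map each volume of the virtual one-node cluster to a physical failback disk."""
    if system_volume not in volumes:
        raise ValueError(f"system volume {system_volume!r} not in {volumes}")
    if not use_second_disk:
        # Option 1: every volume goes to the single local disk.
        return {v: "Disk 1" for v in volumes}
    # Option 2: the system volume goes to Disk 1; former shared-disk volumes go to Disk 2.
    return {v: "Disk 1" if v == system_volume else "Disk 2" for v in volumes}


# Usage: C: is the system volume; Q: and S: came from the cluster's shared disks.
print(plan_failback_disks(["C:", "Q:", "S:"], "C:", use_second_disk=True))
# {'C:': 'Disk 1', 'Q:': 'Disk 2', 'S:': 'Disk 2'}
```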

NOTE: For information about rebuilding the Windows 2008 R2 Failover Cluster environment after a PlateSpin failover and failback occur, see Knowledgebase Article 7015576.

After a PlateSpin failback is complete, you can rejoin other nodes to the newly restored cluster.

5.13.2 Using Workload Protection Features through the PlateSpin Protect Web Services API

You can use workload protection functionality programmatically from within your applications through the protectionservices API. You can use any programming or scripting language that supports an HTTP client and a JSON serialization framework. The API is available at the following URI:

https://<hostname | IP_address>/protectionservices

Replace <hostname | IP_address> with the hostname or IP address of your PlateSpin Server host. If SSL is not enabled, use http instead of https in the URI.
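A script that targets more than one PlateSpin Server can build the base URI from the host and the SSL setting. The following minimal Python sketch does this; the function name is hypothetical.

```python
def protection_services_url(host: str, ssl_enabled: bool = True) -> str:
    """Build the protectionservices base URI for a PlateSpin Server host."""
    scheme = "https" if ssl_enabled else "http"  # use http only when SSL is not enabled
    return f"{scheme}://{host}/protectionservices"


# Usage
print(protection_services_url("10.10.10.5"))                    # https://10.10.10.5/protectionservices
print(protection_services_url("protect01", ssl_enabled=False))  # http://protect01/protectionservices
```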

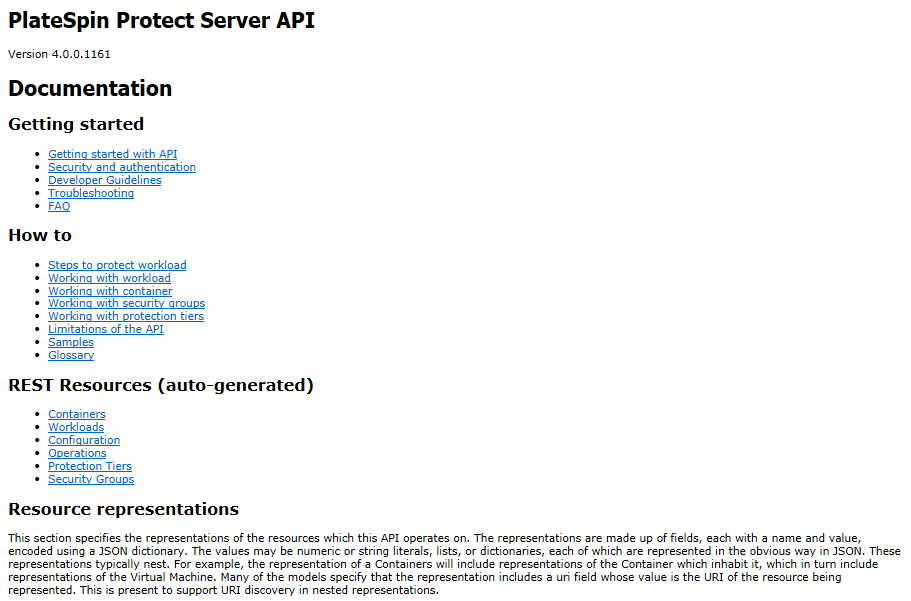

Figure 5-4 The Front Page of the Protect Server API

To script common workload protection operations, use the referenced samples written in Python as guidance. A Microsoft Silverlight application, along with its source code, is also provided for reference purposes.

API Overview

PlateSpin Protect exposes a REST-based API technology preview that developers can use as they build their own applications to work with the product. The API includes information about the following operations:

-

discover containers

-

discover workloads

-

configure protection

-

run replication, failover, and failback operations

-

query for workload and container status

-

query for the status of running operations

-

query security groups and their protection ties
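Because the API uses plain HTTP with JSON payloads, a small helper is enough to start experimenting from Python. This is a sketch under stated assumptions. The `ProtectClient` class is hypothetical. The resource paths you pass to it must come from the API documentation on the front page; none are assumed here. Authentication is not shown, so pass in a `urllib.request` opener configured for whatever your PlateSpin Server requires.

```python
from __future__ import annotations

import json
import urllib.request
from typing import Any, Optional


class ProtectClient:
    """Hypothetical JSON-over-HTTP helper for the protectionservices API."""

    def __init__(self, base_url: str,
                 opener: Optional[urllib.request.OpenerDirector] = None,
                 timeout: float = 30.0):
        self.base_url = base_url.rstrip("/")
        # Supply an opener with the auth handlers your server needs (not shown).
        self.opener = opener or urllib.request.build_opener()
        self.timeout = timeout

    def build_request(self, method: str, path: str,
                      body: Optional[dict[str, Any]] = None) -> urllib.request.Request:
        """Create a request for base_url/path, serializing body as JSON if given."""
        data = json.dumps(body).encode("utf-8") if body is not None else None
        req = urllib.request.Request(f"{self.base_url}/{path.lstrip('/')}",
                                     data=data, method=method)
        req.add_header("Accept", "application/json")
        if data is not None:
            req.add_header("Content-Type", "application/json")
        return req

    def call(self, method: str, path: str,
             body: Optional[dict[str, Any]] = None) -> Any:
        """Send the request and decode a JSON response (None if the body is empty)."""
        with self.opener.open(self.build_request(method, path, body),
                              timeout=self.timeout) as resp:
            payload = resp.read()
        return json.loads(payload) if payload else None


# Usage (path is a placeholder; take real paths from the API documentation):
client = ProtectClient("https://protect01/protectionservices")
# status = client.call("GET", "<resource path from the API documentation>")
```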

PlateSpin Protect administrators can use a JScript sample (https://localhost/protectionservices/Documentation/Samples/protect.js) from the command line to access the product through the API. The sample can help you write your own scripts for working with the product. Using the command-line utility, you can perform the following operations:

-

add a single workload

-

add a single container

-

run the replication, failover, and failback operations

-

add multiple workloads and containers at one time

NOTE: For more information about this operation, see the API documentation at https://localhost/protectionservices/Documentation/AddWorkloadsAndContainersFromCsvFile.htm.

-

remove all workloads at one time

-

remove all containers at one time

The PlateSpin Protect REST API home page (https://localhost/protectionservices/ or https://<hostname | IP_address>/protectionservices/) includes links to content that can be useful to developers and administrators.

This technology preview will be fully developed with more features in subsequent releases.