6.1 Availability and Fault Tolerance

Terms commonly used to describe a system’s required availability include “24 (hours) by 7 (days),” “three-nines,” and “five-nines.” The last two describe the required percentage availability (99.9% and 99.999%, respectively).
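To make these percentages concrete, the following sketch converts an availability target into the downtime it permits. It is illustrative arithmetic only: the function name is hypothetical, and the one-year (365-day) measurement window is an assumption, since the text does not specify a period.

```python
# Downtime permitted by an availability target (illustrative arithmetic only).
# Assumption: availability is measured over a 365-day year.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def downtime_budget_minutes(availability_percent: float) -> float:
    """Return the minutes of downtime per year that the target permits."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for label, target in [("three-nines", 99.9), ("five-nines", 99.999)]:
    budget = downtime_budget_minutes(target)
    print(f"{label} ({target}%): {budget:.2f} minutes of downtime per year")
```

Under this assumption, three-nines allows roughly 8.8 hours of downtime per year, while five-nines allows only a little over 5 minutes.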

High availability conveys the importance of keeping the system up and running as long as possible. High availability typically refers to the running state of an application. For Operations Center, it generally refers to the whole system as an entity that is available.

High availability has costs associated with it. For example, a system that is allowed 20 minutes of unscheduled downtime per week costs less than one that is allowed only 20 minutes of downtime over an entire month, because the stricter target leaves less room for failures.

High availability is directly connected to fault tolerance. Fault tolerance usually indicates there is a system in place to ensure undisrupted system availability in the event of hardware or software failure. The type of fault tolerance employed determines the impact on availability if a component of the system fails.

The levels of fault tolerance vary. For example, mirrored drives are good; mirrored RAID drives are better; and dual servers with mirrored RAID drives are even better. Each step in improvement has a cost associated with it.

High availability can be achieved using hardware, software, or both. Some software is provided directly by the hardware manufacturer, while other technologies are add‑ons from third parties (hardware and/or software).

To implement high availability and fault tolerance, you should focus on these components:

- Operations Center server
- Management systems that integrate with Operations Center
- Databases used by Operations Center for storing or obtaining data
- Networking components (LAN and/or WAN)

A configuration that achieves high availability can include:

- Dual (or more) servers for Operations Center (physical or clustered)
- Dual (or more) management systems (OpenView, Netcool, etc.; physical or clustered)
- Potentially dual networking components

Review the following sections for more information on server configurations:

6.1.1 Operations Center server Configurations

The Operations Center server can be configured as either a standalone server or a clustered server. Each option affects high availability and fault tolerance as follows:

- Standalone: Standalone is the most basic server configuration. A single Operations Center server on a RAID volume with a UPS is a simple standalone system that does not meet the goals of high availability or fault tolerance. A software failure, motherboard failure, or network card failure makes the service unavailable to end users, and the service remains unavailable until the problem is resolved.

- Clustered: A clustered server configuration consists of multiple servers configured in the same way to provide the same type of service, with a single “front door” component that makes them appear to be a single server. On the back end, only one server provides the service. If that server fails, a second server begins providing the service. The main benefit is that end users do not need to reconfigure or reconnect to the server or service, and it often appears as if the service never failed.

Cluster solutions can be both hardware and software based. Software based solutions typically configure multiple physical servers. Hardware based solutions partition an individual server into multiple servers. Regardless of which method is used, end users only see a single server providing access.

A failover server in a cluster can be in one of the following states:

- Hot: The failover server is up and running, with all data and information up-to-date and ready to be used.
- Warm: The failover server is up and running, but not all of its data is up-to-date. Some type of update is required to synchronize the data or information.
- Cold: The failover server is not running and does not have any data.

Many clustering systems are Hot/Cold; when the production server process (Hot) stops working, the cluster automatically activates the secondary server process (Cold) to take over.

Hot/Cold indicates that when the active server process stops, the other process starts from the ground up, as if you had just booted the computer. For example, if an application server typically takes 20 minutes to start up and become accessible to end users, then the high availability environment must allow for an outage of at least 20 minutes for any failure. If the high availability requirement is “five-nines” but the clustering implementation is Hot/Cold, then achieving “five-nines” is at risk, because a single failure, such as a network card failure, causes an outage of at least 20 minutes.
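The risk described above can be checked with a short calculation. The sketch below counts how many failovers of a given duration fit inside an availability target's downtime budget. The 20-minute startup time comes from the example above. The function name and the one-year (365-day) measurement window are assumptions made for illustration.

```python
# How many failovers of a given length fit within an availability target?
# Assumption: availability is measured over a 365-day year.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def failovers_within_budget(availability_percent: float, failover_minutes: float) -> int:
    """Return the number of whole failovers per year that the target permits."""
    budget = MINUTES_PER_YEAR * (1 - availability_percent / 100)
    return int(budget // failover_minutes)


STARTUP_MINUTES = 20  # Hot/Cold startup time from the example in the text

for label, target in [("three-nines", 99.9), ("five-nines", 99.999)]:
    count = failovers_within_budget(target, STARTUP_MINUTES)
    print(f"{label}: {count} Hot/Cold failover(s) per year fit within the budget")
```

With these assumptions, a single 20-minute Hot/Cold failover already exceeds the annual five-nines budget of about 5.26 minutes, whereas a three-nines target can absorb several such failovers.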

Hot/Warm configurations fail over faster than Hot/Cold configurations, but the failover is not transparent to end users because of the time required to synchronize or update the data.

Hot/Hot is the best option available, but this option does not guarantee a seamless failover.

6.1.2 Example

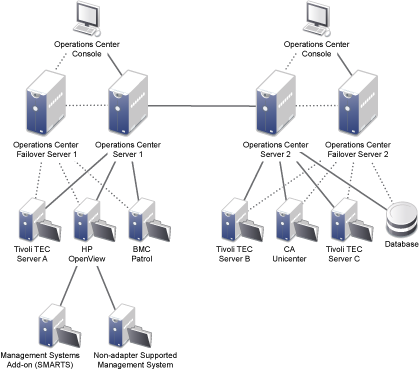

Figure 6-1 illustrates an Operations Center implementation that has high availability and fault tolerance.

Figure 6-1 Operations Center Configuration for High Availability and Fault Tolerance

Users open a URL to operationscenter.myCompany.com, which sends the user to Operations Center server 1 (left side), or in the event of a failure, users are directed to Failover Operations Center server 1. This achieves the first level of fault tolerance and high availability.

Both Operations Center servers are configured and run at the same time (Hot/Hot) with the same data because of the dual connections to the underlying management systems. The assumption is that the underlying management systems are configured in the same manner.

One Operations Center server can also be configured with dual adapters connected to the primary and backup of each management system.
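The primary/backup behavior in this example, at both the “front door” and the adapter level, follows the same rule: use the primary when it is healthy, otherwise fall back to the backup. The sketch below illustrates that rule only. It is not an Operations Center API; the `Endpoint` class, the `select_active` function, and the endpoint names are hypothetical, and in practice the health check would be whatever probe the cluster or adapter uses.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Endpoint:
    """A hypothetical server or management system that can be connected to."""
    name: str
    role: str  # "primary" or "backup"


def select_active(
    endpoints: Sequence[Endpoint],
    is_healthy: Callable[[Endpoint], bool],
) -> Optional[Endpoint]:
    """Return the first healthy endpoint in priority order, or None if all are down."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


# Hypothetical primary/backup pair, listed in priority order.
pair = [Endpoint("mgmt-primary", "primary"), Endpoint("mgmt-backup", "backup")]

# Simulate a failure of the primary; the health check here is a stand-in.
down = {"mgmt-primary"}
active = select_active(pair, lambda e: e.name not in down)
print(active.name if active else "no endpoint available")
```

Listing endpoints in priority order keeps the selection logic independent of how many backups exist, which matches the “dual (or more)” configurations described earlier.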