1.9 Troubleshooting SUSE Xen VM Provisioning Actions

The following sections provide solutions to problems you might encounter while performing provisioning actions on VMs that are created in SUSE Xen and managed by the Orchestration Server:

Provisioning a Xen VM Does Not Work on the Host Server

[c121] RuntimeError: vmprep: Autoprep of /var/lib/xen/images/min-tmpl-1-2/disk0 failed with return code 1: vmprep: autoprep: /var/adm/mount/vmprep.3f96f60206a2439386d1d80436262d5e: Failed to mount vm image "/var/lib/xen/images/min-tmpl-1-2/disk0": vmmount: No root device found Job 'zosSystem.vmprep.76' terminated because of failure. Reason: Job failed

A VM host cannot provision a VM whose file system differs from the VM host's own file system unless the VM host kernel supports the VM's file system. The currently supported file systems are ext2, ext3, reiserfs, jfs, xfs, vfat, and ntfs.

Linux kernels typically autoload the appropriate module to do this work.

If the module is not loaded automatically, you must manually load the proper kernel module on the VM host to support the VM's file system.

For example, if the VM host uses ext3 and the VM image uses reiserfs, load the reiserfs kernel module by running the following command on the VM host:

modprobe reiserfs

Next, provision the VM.
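The check-then-load step above can be scripted so that modprobe runs only when it is needed. This is a minimal sketch, assuming a Linux VM host that lists its registered file systems in /proc/filesystems and a kernel module whose name matches the file system name (as with reiserfs); fs_supported and ensure_fs_module are hypothetical helpers, not Orchestration Server tools.

```shell
#!/bin/sh
# Sketch only: fs_supported and ensure_fs_module are hypothetical helpers.
# Assumes a Linux VM host that lists registered file systems in
# /proc/filesystems and a module name equal to the file system name.

# fs_supported FSTYPE [FILE]
# Succeeds if FSTYPE appears in FILE (default: /proc/filesystems).
# Each line has the form "[nodev]<TAB>name", so the name is the last field.
fs_supported() {
    awk -v fs="$1" '$NF == fs { found = 1 } END { exit(found ? 0 : 1) }' \
        "${2:-/proc/filesystems}"
}

# ensure_fs_module FSTYPE
# Runs modprobe (root required) only if the kernel does not already
# support FSTYPE, then re-checks that the file system is registered.
ensure_fs_module() {
    if fs_supported "$1"; then
        echo "$1: already supported"
    elif modprobe "$1" && fs_supported "$1"; then
        echo "$1: module loaded"
    else
        echo "$1: could not load module" >&2
        return 1
    fi
}

# Example, run as root on the VM host before provisioning:
#   ensure_fs_module reiserfs
```

Checking /proc/filesystems first keeps the script harmless on hosts where the kernel already supports the file system; the re-check after modprobe confirms that the module actually registered the file system.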

NOTE: Cloning with prep is limited to what the Virtual Center of VMware Server supports.

Multiple Instances of the Same Xen VM Running when Located on Shared Storage

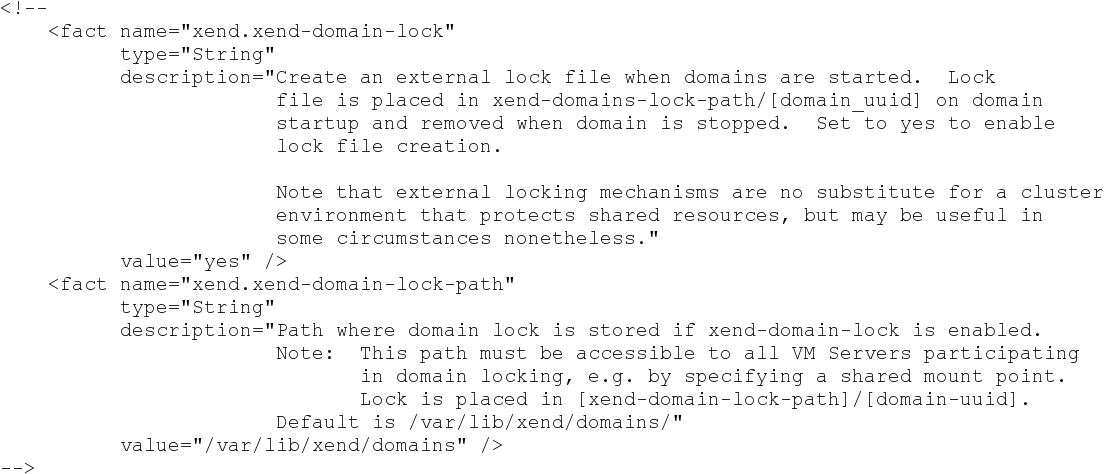

To enable VM locking, uncomment the following facts in the xendConfig.policy file:

To uncomment the section, remove the “<!--” (comment open) tag and the “-->” (comment close) tag. Then edit the xend-domain-lock-path fact to set an alternate location on shared storage that is available to all VM hosts.

After you make these changes and save the file, the facts become active, and the VM locking parameters of each newly joining VM host are adjusted accordingly.

You can also schedule an immediate run of the xendConfig job to adjust the configuration files of all Xen VM hosts that are already connected to the Orchestration Server.
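After the xendConfig job runs, you might want to confirm which lock settings a VM host actually received. The sketch below assumes that the job writes the SLES Xen settings xend-domain-lock and xend-domain-lock-path as "(name value)" entries into /etc/xen/xend-config.sxp; that file path, the xend-domain-lock option, and the helper name xend_option are assumptions for illustration, not documented Orchestration Server behavior.

```shell
#!/bin/sh
# Sketch only: xend_option is a hypothetical helper. Assumes the lock
# settings live in /etc/xen/xend-config.sxp as "(name value)" entries.

# xend_option NAME [FILE]
# Prints the value of each uncommented "(NAME value)" line in FILE.
# Commented lines start with "#" and are therefore skipped.
xend_option() {
    sed -n "s/^[[:space:]]*($1[[:space:]]\{1,\}\([^)]*\)).*/\1/p" \
        "${2:-/etc/xen/xend-config.sxp}"
}

# Example, run on each VM host after the xendConfig job completes:
#   xend_option xend-domain-lock
#   xend_option xend-domain-lock-path
```

If xend_option xend-domain-lock-path prints a location on the shared storage that every VM host can reach, that host is using the shared lock path; empty output means the option is still commented out or absent.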

NOTE: Setting the lock path through the Orchestration Server supports only the scenario in which all VM hosts have the domain lock path connected to the same shared repository. For more complex setups, use alternative methods to adjust the lock configuration of the VM hosts.

Running xm Commands on an Old Xen VM Host Causes Server to Hang

Make sure that the VM host has the SLES 11 Xen maintenance release #1 (or later) of the tools installed:

Xen 3.3.1_18546_14
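To check whether a VM host meets this minimum, you can compare its installed version string against 3.3.1_18546_14. This is a minimal sketch, assuming version strings made of numeric fields separated by dots and underscores and an RPM package named xen; version_at_least is a hypothetical helper. It compares fields numerically with awk instead of relying on sort -V, which may not be available in older coreutils releases.

```shell
#!/bin/sh
# Sketch only: version_at_least is a hypothetical helper. Assumes version
# strings made of numeric fields separated by "." or "_" (for example,
# 3.3.1_18546_14); non-numeric fields would compare as 0.

# version_at_least INSTALLED MINIMUM
# Succeeds if INSTALLED is equal to or newer than MINIMUM, comparing
# field by field as integers; missing trailing fields count as 0.
version_at_least() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, x, "[._]"); nb = split(b, y, "[._]")
        n = (na > nb) ? na : nb
        for (i = 1; i <= n; i++) {
            if ((x[i] + 0) > (y[i] + 0)) exit 0
            if ((x[i] + 0) < (y[i] + 0)) exit 1
        }
        exit 0
    }'
}

# Example on the VM host (assumes the RPM package is named "xen"):
#   installed=$(rpm -q --qf '%{VERSION}\n' xen)
#   version_at_least "$installed" 3.3.1_18546_14 || echo "Update the Xen tools first"
```

Comparing field by field as integers avoids the trap of plain string comparison, where 3.3.1_18546_9 would sort after 3.3.1_18546_14.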