A.6 Monitoring Applications in an Active/Active Cluster

In an active/active cluster environment, the servers in Microsoft Cluster Server (MSCS) work independently of each other, each carrying its own share of the processing load. If a failure occurs in the hardware, operating system, or services on one of the servers, the other cluster nodes absorb the failed server’s processes.

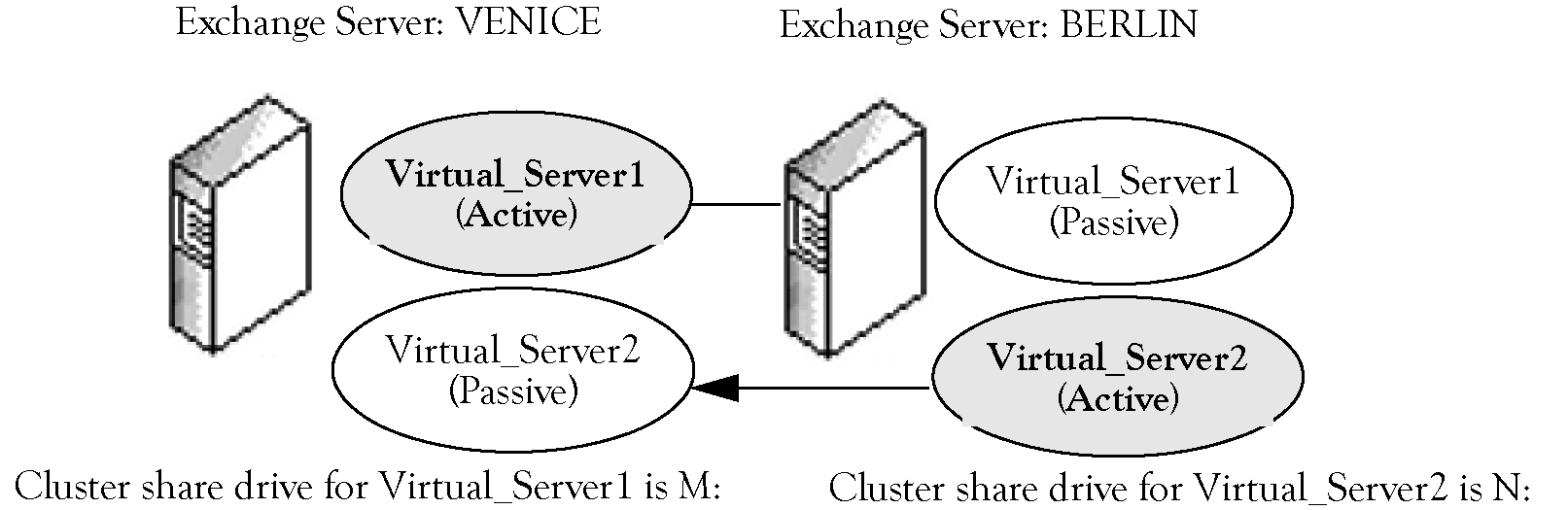

For example, consider a relatively simple configuration of two Exchange 2003 servers, each node running one virtual server instance. If either node fails, its messaging services are automatically transferred to the backup node, which then handles all messaging until the failed node becomes available again.

Suppose the active virtual server instance on VENICE fails. Its messaging services are transferred to the backup node, BERLIN. Because BERLIN now handles messaging for both Virtual_Server1 and Virtual_Server2, its processing load is potentially much heavier than that of a backup node in an active/passive configuration, but messaging continues uninterrupted.
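The failover behavior described above can be modeled in a few lines. The following Python sketch is illustrative only: the function names `fail_over` and `load_per_node` are hypothetical, and the rule for choosing a target node (the surviving node hosting the fewest virtual servers) is a simplifying assumption. In MSCS, the node that takes over a group depends on that group's configuration, such as its preferred owners.

```python
# Hypothetical model of the two-node active/active example: each virtual
# server is owned by one physical node, and a node failure moves its
# virtual servers to a surviving node.


def fail_over(owners: dict[str, str], failed_node: str, nodes: list[str]) -> dict[str, str]:
    """Return a new virtual-server -> node map after failed_node goes down."""
    survivors = [n for n in nodes if n != failed_node]
    if not survivors:
        raise RuntimeError("no surviving node can absorb the workload")
    # Virtual servers on healthy nodes stay where they are.
    new_owners = {vs: n for vs, n in owners.items() if n != failed_node}
    moved = sorted(vs for vs, n in owners.items() if n == failed_node)
    for vs in moved:
        # Assumed placement rule: pick the survivor with the lightest load.
        load = {n: 0 for n in survivors}
        for n in new_owners.values():
            load[n] += 1
        new_owners[vs] = min(survivors, key=lambda n: load[n])
    return new_owners


def load_per_node(owners: dict[str, str], nodes: list[str]) -> dict[str, int]:
    """Count how many virtual servers each physical node is hosting."""
    return {n: sum(1 for o in owners.values() if o == n) for n in nodes}


nodes = ["VENICE", "BERLIN"]
owners = {"Virtual_Server1": "VENICE", "Virtual_Server2": "BERLIN"}
after = fail_over(owners, "VENICE", nodes)
print(load_per_node(after, nodes))
```

After VENICE fails, the model places both virtual servers on BERLIN, which is the doubled load the paragraph above describes.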

NOTE: Although the example assumes an Exchange cluster, most of the information applies to any active/active cluster configuration. Similarly, the example illustrates a simple configuration with two physical nodes and two virtual servers. If your cluster has additional nodes and server instances, you can follow the same basic strategy, but the configuration becomes more complex.

For AppManager to continue application-level monitoring when a virtual server fails over from one node to another, you need to configure the AMAdmin_SetResDependency Knowledge Script to identify when jobs should be active on each server in the Microsoft Cluster Server.

A.6.1 Setting the Resource Dependency for an Active/Active Cluster

For AppManager to transfer application monitoring between physical nodes when a failover event occurs, you must run each Knowledge Script you want to use on every physical node that could host a virtual server. Because jobs are placed in standby mode based on the Knowledge Script category and a resource dependency, you must create a custom Knowledge Script category for each virtual server in the Microsoft Cluster Server.
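The standby rule can be expressed as a small predicate. This Python sketch is a hypothetical model of the observable behavior, not AppManager's implementation: `job_state`, the dictionaries it takes, and the convention of reading the category from the text before the first underscore in the script name (as in the Virtual01 and Virtual02 examples in this section) are assumptions made for illustration.

```python
# Hypothetical model: a job is active on a node only when that node
# currently owns the resource its Knowledge Script category depends on.


def job_state(script_name: str, node: str,
              dependencies: dict[str, str],
              resource_owner: dict[str, str]) -> str:
    """Return the job status of script_name on node (illustrative only)."""
    # Assumed convention: category = text before the first underscore.
    category = script_name.split("_", 1)[0]
    # Resource the category depends on, for example drive "M:".
    required = dependencies[category]
    # Active only on the node where the required resource is available.
    if resource_owner[required] == node:
        return "Running/Active"
    return "Running/Inactive"


dependencies = {"Virtual01": "M:", "Virtual02": "N:"}
resource_owner = {"M:": "VENICE", "N:": "BERLIN"}

for node in ("VENICE", "BERLIN"):
    print(node, job_state("Virtual01_TopNSenders", node, dependencies, resource_owner))
```

The loop prints Running/Active for VENICE and Running/Inactive for BERLIN, because only VENICE owns drive M:.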

To create custom Knowledge Script categories and set the resource dependency:

1. Select the Knowledge Scripts you want to run, and identify the physical nodes and virtual server instances that you will monitor. For example, assume you want to run the Exchange_TopNSenders Knowledge Script on an active/active cluster with two physical nodes:

   - VENICE, with the active virtual server instance Virtual_Server1
   - BERLIN, with the active virtual server instance Virtual_Server2

   Each active virtual server is configured to fail over to the backup node, with Virtual_Server1 dependent on drive M: (owned by VENICE) and Virtual_Server2 dependent on drive N: (owned by BERLIN).

2. In the Operator Console or Control Center, copy and rename each script you want to run to create a unique Knowledge Script category. For example, right-click the Exchange_TopNSenders Knowledge Script and select Copy Knowledge Script. Rename each copy so that its category identifies a virtual server:

   - Virtual01_TopNSenders.qml
   - Virtual02_TopNSenders.qml

   Repeat this for each Knowledge Script you want to run against the cluster and for each virtual server instance you want to monitor. In effect, each virtual server in the Microsoft Cluster Server needs its own set of Knowledge Scripts.

   NOTE: Create a unique category and server group for each virtual server being monitored.

3. In the TreeView pane, select one of the physical nodes for which you need to set the resource dependency.

   During discovery, AppManager discovers both the physical nodes and the virtual servers associated with each node. The TreeView displays every virtual server associated with each physical node, not only the node where the virtual server is active. For example:

   - VENICE
     - Virtual_Server1 (active)
     - Virtual_Server2 (inactive)
   - BERLIN
     - Virtual_Server1 (inactive)
     - Virtual_Server2 (active)

4. Run the AMAdmin_SetResDependency Knowledge Script on the physical node you selected in step 3, for example, on the server VENICE. The AMAdmin_SetResDependency Knowledge Script lets you define the circumstances under which jobs should be inactive:

   - In Exchange, a virtual server is active on only one node at a time. If you are monitoring an application that allows multiple virtual servers to be active on the same node at the same time, create additional Knowledge Script copies for each virtual server and carefully identify the resource dependency for each.
   - Inactive Knowledge Scripts are simply in standby mode on the backup node, waiting for a particular resource to appear, for example, a logical drive or a service that identifies an active instance. If the specified resource becomes available because of a failover event, the AMAdmin_SetResDependency job switches the Knowledge Scripts in the specified category to active mode, and the jobs begin running on the backup node.

5. Using the example with VENICE as the target server, set the values in the Properties dialog box as follows:

   | Parameter | Description |
   |---|---|
   | Knowledge Script category | The category you created in step 2. For example, Virtual01 for the active virtual server on VENICE. |
   | Required available resources | The drive that the active virtual server depends on. For example, drive M:, on which the Virtual_Server1 instance relies. |

6. Repeat steps 3 through 5 for each physical node in the Microsoft Cluster Server. For example, repeat the steps for the server BERLIN and set the Knowledge Script properties as follows:

   | Parameter | Description |
   |---|---|
   | Knowledge Script category | The category you created in step 2. For example, Virtual02 for the active virtual server on BERLIN. |
   | Required available resources | The drive that the active virtual server depends on. For example, drive N:, on which the Virtual_Server2 instance relies. |

7. Run all of the Knowledge Scripts you created in step 2 on all physical nodes in the Microsoft Cluster Server. If you established a server group for the cluster, run the jobs on the server group. For example, run Virtual01_TopNSenders and Virtual02_TopNSenders on both VENICE and BERLIN.

   In the Operator Console, the job status is displayed as Running/Active or Running/Inactive: Virtual01 scripts are active on VENICE and inactive on BERLIN, and Virtual02 scripts are active on BERLIN and inactive on VENICE.
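The naming in step 2 and the job layout in step 7 can be sketched together. In this hypothetical Python model, `make_copies` derives one script copy per virtual server category, and `status_matrix` assigns each (script, node) job a status from drive ownership, assuming each category's resource dependency is evaluated on every node where that category's jobs run. None of these names are part of AppManager.

```python
# Hypothetical model of steps 2 and 7: one copy of each base Knowledge
# Script per virtual server category, every copy run on every node.
from itertools import product


def make_copies(base_scripts: list[str], categories: list[str]) -> list[str]:
    """Rename copies so the category prefix identifies a virtual server."""
    # "Exchange_TopNSenders" + "Virtual01" -> "Virtual01_TopNSenders"
    return [f"{cat}_{base.split('_', 1)[1]}"
            for cat, base in product(categories, base_scripts)]


def status_matrix(copies, nodes, dependencies, resource_owner):
    """Map each (script, node) job to its expected status."""
    matrix = {}
    for script, node in product(copies, nodes):
        category = script.split("_", 1)[0]
        owner = resource_owner[dependencies[category]]
        matrix[(script, node)] = "Running/Active" if owner == node else "Running/Inactive"
    return matrix


copies = make_copies(["Exchange_TopNSenders"], ["Virtual01", "Virtual02"])
nodes = ["VENICE", "BERLIN"]
dependencies = {"Virtual01": "M:", "Virtual02": "N:"}
resource_owner = {"M:": "VENICE", "N:": "BERLIN"}

matrix = status_matrix(copies, nodes, dependencies, resource_owner)
for (script, node), status in sorted(matrix.items()):
    print(f"{node:7} {script:22} {status}")
```

With one base script, two virtual servers, and two nodes, the model yields 1 × 2 × 2 = 4 jobs. In general the job count grows as scripts × virtual servers × nodes, which is one reason larger clusters are more complex to configure.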

If Virtual_Server2 fails, its Exchange processes fail over from BERLIN to VENICE. VENICE takes ownership of drive N:, and AppManager detects this change through the AMAdmin_SetResDependency job.

After the failover, the status in the Job pane changes as follows. On VENICE:

- The Virtual01_TopNSenders job is Running/Active.
- The Virtual02_TopNSenders job is Running/Active.

On BERLIN, both the Virtual01_TopNSenders and Virtual02_TopNSenders jobs are Running/Inactive.
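The status change above follows from a single ownership change. This hypothetical Python sketch recomputes every job's status before and after drive N: moves from BERLIN to VENICE and reports which jobs changed. It models the behavior described in this section, not the internals of AMAdmin_SetResDependency, and it assumes each category's dependency is evaluated on every node.

```python
# Hypothetical model of the Virtual_Server2 failover in this example.

DEPENDENCIES = {"Virtual01": "M:", "Virtual02": "N:"}
JOBS = ["Virtual01_TopNSenders", "Virtual02_TopNSenders"]
NODES = ["VENICE", "BERLIN"]


def statuses(resource_owner: dict[str, str]) -> dict[tuple[str, str], str]:
    """Derive each (job, node) status from current drive ownership."""
    result = {}
    for node in NODES:
        for job in JOBS:
            category = job.split("_", 1)[0]
            active = resource_owner[DEPENDENCIES[category]] == node
            result[(job, node)] = "Running/Active" if active else "Running/Inactive"
    return result


def changed_jobs(before, after):
    """List the (job, node) pairs whose status differs."""
    return sorted(key for key in before if before[key] != after[key])


before = statuses({"M:": "VENICE", "N:": "BERLIN"})
after = statuses({"M:": "VENICE", "N:": "VENICE"})  # VENICE now owns N:
for job, node in changed_jobs(before, after):
    print(f"{job} on {node}: {before[(job, node)]} -> {after[(job, node)]}")
```

Only the two Virtual02_TopNSenders jobs change state; the Virtual01_TopNSenders jobs keep their status because drive M: never changes owner.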

Monitoring, automated actions, and data collection continue from the new physical node without interruption. Because data stream information is associated with the physical node, not the virtual server, graph data may appear disjointed.